Christina Cardoza: Hello, and welcome to the IoT Chat, where we explore the latest developments in the Internet of Things. I’m your host, Christina Cardoza, Editorial Director of insight.tech. And today we’re talking about the Intel® 13th Generation Core™ processors with Jeni Barovian Panhorst from Intel. Jeni, welcome to the show.

Jeni Barovian Panhorst: Thanks so much, it’s great to be here.

Christina Cardoza: So, before we get into the conversation, I know you’ve been at Intel for a long time in a variety of roles, so I would love to hear more about yourself and what you do at Intel.

Jeni Barovian Panhorst: Yeah, so I lead the Network and Edge Compute Division in Intel’s Network and Edge Group. So, basically what that means is that I have responsibility for our silicon portfolio that services network infrastructure and edge computing across a number of different sectors. And then also the platform software that unleashes the technology and capabilities within that silicon to service all those exciting use cases. So, really excited to be here today and talk specifically about one of the products, or a couple of the products, in that portfolio, specifically the 13th Generation Intel Core processors.

Christina Cardoza: Absolutely. And I know Intel just made that release at the beginning of the year. And, like you said, you are working in edge roles and network roles, and I think this release is going to be huge for those markets. So I would love to start off the conversation by learning a little bit more about the release: the 13th Generation Core processors, codenamed Raptor Lake. What makes this so exciting for the network and edge markets today?

Jeni Barovian Panhorst: Yeah. So, we’re here to talk about the new 13th Generation Intel Core processors for the IoT edge. And those are really our top choice to maximize performance, memory, and IO in edge deployments. And also we want to talk about the new 13th Generation Intel Core mobile processors, which are focused on combining power efficiency and performance and flexibility, as well as industrial-grade features that focus specifically on areas that are important for network and IoT edge, including AI and graphics and ruggedized edge use cases.

And if we look specifically at some of the capabilities of these 13th Gen Intel Core mobile processors, they’re focused on delivering a boost in performance compared to the prior generation, while also offering really a range of options for different power design points. So this allows our customers to get exactly the performance per watt that they’re looking for, in what are often space- and power-constrained deployments. And so our customers, and the solution providers that deliver solutions to those customers, are able to benefit from higher single-threaded performance, higher multi-threaded performance, graphics, AI performance, but they also are really benefiting from increased flexibility to run more applications simultaneously, more workloads and more connected devices, which are very critical at the IoT edge.

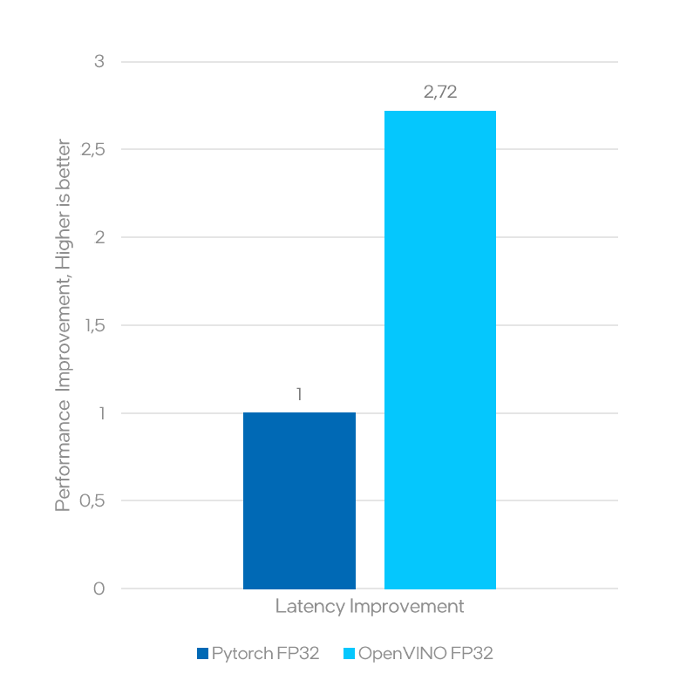

And so I just wanted to talk about a couple of features that are really exciting about these product launches. First of all, we offer up to 14 cores and 20 threads with our performance-hybrid architecture. And we have a technology called Intel® Thread Director that allows us to match our—match the cores specifically to the needs of our customers’ workloads. We also have really great graphics performance. And this is really essential at the edge for use cases like autonomous mobile robotics, optical inspection use cases; and the 13th Gen Intel Core mobile processors, they feature integrated Intel® Iris® Xe graphics with up to 96 graphics execution units for fast visual processing. And that high number of graphics EUs also enables parallel processing for a number of AI workloads. And so then when you combine that with the capabilities of the processor, that has technologies like Intel® DL Boost with VNNI instructions, and combining that with developer tools like the Intel OpenVINO™ toolkit, all of this in combination has an opportunity to further enhance AI inference optimization on Intel-enabled solutions, which help reduce dependence on external accelerators.

Another thing I wanted to talk about was that the 13th Gen Intel Core mobile processors are the first generation of mobile processors to introduce PCI Express Gen 5 connectivity. This is enabled on the H-series SKUs specifically, and it was previously already available on the 12th Gen Intel Core desktop processors. So PCIe Gen 5 allows our customers to really focus on deploying more demanding workloads in more places, because there’s a much bigger data pipeline and the ability to provide faster, more-capable connections to a variety of different peripherals.

And, last but not least, I talked a little bit about industrial-grade features. The 13th Gen Intel Core mobile processors also are focused on really redefining industrial intelligence by bringing flexibility and scalability and durability to the edge. And so select SKUs in the portfolio are compliant with industrial-grade use cases, which allows our customers to operate at 100% utilization over 10 years. They also support stringent environments, so they offer extended temperature ranges of -40 °C to 100 °C. And they also offer support for in-band ECC memory to improve reliability of those use cases, and deliver the types of performance and capability that are needed in harsh environments for installations in areas like machine control, AMR, avionics, and other really exciting use cases for the IoT edge.

Christina Cardoza: So, sounds like it’s packed with a lot of great new features and capabilities. You know, I love to hear about all the multitasking power. And you mentioned some use cases like autonomous robots; I can just imagine that that’s taking so much power, with so many things running behind the scenes and so much memory needed to make that happen. So it’s great to see that the processors are improving to make sure that things don’t slow down and that performance is improved to handle those more demanding workloads. It seems like at Intel you guys are always on top of things, always thinking of what’s next, and always releasing new processors like this.

It feels like the 12th Generation processors were just released, and then you guys updated to the 13th Generation processors. But I think these Core processors actually carry forward the hybrid microarchitecture that we first saw in the 12th Generation release. Can you talk a little bit more about how you guys are utilizing that architecture?

Jeni Barovian Panhorst: Yeah, absolutely. And it is really important to address those complex workloads that you were just talking about just now. And as you said, we introduced that performance-hybrid architecture in 12th Gen, which is really about bringing together the best of two Intel architectures: our Performance cores, or P-cores; and then also our Efficient cores, or our E-cores, onto a single SoC. And so you know, really the primary advantage of bringing this into a single product, this Intel performance-hybrid architecture, is to be able to scale up multi-threaded performance by using these P-cores and E-cores optimally for the workloads at hand.

You know, it’s pretty intuitive that the performance of certain multi-threaded applications scales with the number of cores that are available to them. But, really, that performance scale-up is dependent upon how efficiently a given application is divided into multiple different tasks and the number of available CPUs to deliver that parallel execution of those tasks. So, to cater to that vast array, that vast diversity of client applications and usages of cores, we focused on designing an SoC architecture where the larger cores are utilized and unleashed in performance to go after single-threaded performance and limited-threading scenarios. And then, simultaneously, the efficient cores or the E-cores can help extend scalability of multi-threaded performance over prior generations. So, by putting these together, that’s where we were able to deliver this performance-hybrid technology that achieves the best performance on multi-threaded, as well as limited-threaded and power-constrained workloads.
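As a rough illustration of the scaling point above, the dependence on how much of an application can be split into parallel tasks is commonly modeled with Amdahl's law. The sketch below is an added example, not something cited in the conversation, and it assumes identical cores, which is a simplification for a hybrid design that mixes P-cores and E-cores. The function name `amdahl_speedup` is hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the work can be
    spread across `cores` identical cores (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part runs at full length; the parallel part shrinks
    # in proportion to the number of cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Compare a poorly parallelized and a well parallelized application.
    for fraction in (0.5, 0.9, 0.99):
        speedups = [round(amdahl_speedup(fraction, n), 2) for n in (1, 4, 14)]
        print(f"parallel fraction {fraction}: {speedups}")
```

The takeaway matches the point made in the conversation: adding cores helps only as far as the application is actually divided into parallel tasks, so strong single-threaded cores still matter for lightly threaded work.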

And, as I mentioned briefly before, that performance-hybrid architecture is coupled with Intel Thread Director, which optimizes performance for concurrent workloads across these P-cores and E-cores. So how that works is that the Thread Director just monitors that instruction mix in real time. And it provides that runtime feedback to the operating system, and it dynamically then provides guidance to the scheduler in the operating system, allowing it to make more intelligent and data-driven decisions on how to schedule those threads.

And so performance threads are prioritized on the P-cores, delivering responsive performance where maybe there aren’t as many limitations in terms of power requirements. And then the E-cores are utilized for highly parallel workloads, and other power-constrained conditions where power might be needed elsewhere in the system, such as the graphics engines that I mentioned earlier, or perhaps other accelerators in the platform. And then combined this delivers the best user experience.
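The placement idea described above can be sketched as a toy model. This is not the Intel Thread Director interface or any real operating system scheduler; the `ThreadHint` type, the numeric `priority` score, and the `assign_cores` function are all hypothetical names made up for illustration. The sketch only shows the general pattern of steering the most performance-critical threads to P-cores and spreading the rest across E-cores.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ThreadHint:
    name: str
    priority: int  # hypothetical score: higher means more performance-critical


def assign_cores(threads: List[ThreadHint], p_cores: int, e_cores: int) -> Dict[str, str]:
    """Toy placement: top-priority threads get one P-core each,
    remaining threads share the E-cores round-robin."""
    if p_cores < 0 or e_cores < 1:
        raise ValueError("need p_cores >= 0 and e_cores >= 1")
    # sorted() is stable, so threads with equal priority keep their input order.
    ranked = sorted(threads, key=lambda t: t.priority, reverse=True)
    placement: Dict[str, str] = {}
    for index, thread in enumerate(ranked):
        if index < p_cores:
            placement[thread.name] = f"P{index}"
        else:
            placement[thread.name] = f"E{(index - p_cores) % e_cores}"
    return placement


if __name__ == "__main__":
    demo = [
        ThreadHint("ui", 9),
        ThreadHint("backup", 1),
        ThreadHint("inference", 7),
        ThreadHint("logging", 2),
    ]
    print(assign_cores(demo, p_cores=2, e_cores=2))
```

In a real system the hints are produced at runtime and the operating system remains free to weigh them against power and thermal conditions; the static priority score here stands in for that feedback.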

Christina Cardoza: I know that hybrid microarchitecture was really exciting for a lot of people in the last release, so it’s great to see that carryover in this release. And you touched upon a lot of the new capabilities and experiences, but I’m wondering if we can expand a little bit more about what the top capabilities or features, improvements and differences you think users are really going to gain from this release over previous generations.

Jeni Barovian Panhorst: Yeah. So, performance is always top of mind for people. And there are certainly significant gains in the 13th Gen by comparison to the 12th Gen Intel Core processors. So, if we look at performance gains within the same power envelope for the mobile family of products, we’ve got up to 1.08x faster single-threaded performance. In the desktop processors we have up to 1.34x faster multi-threaded performance. And if we look specifically at AI performance, which is so critical for the edge, we’ve got up to 1.25x gains in CPU classification inference workloads. So that’s what we’re seeing in terms of exciting performance gains.
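To put those "up to" multipliers in more familiar terms, a speedup factor can be converted into the share of time saved on a fixed amount of work. The short sketch below is an added illustration using only the three figures quoted above; the function name `time_saved_percent` is hypothetical, and real results will depend on the workload and configuration.

```python
def time_saved_percent(speedup: float) -> float:
    """Percent reduction in time for a fixed amount of work,
    given a speedup factor such as 1.34 (meaning 1.34x faster)."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return (1.0 - 1.0 / speedup) * 100.0


# "Up to" figures quoted in the conversation (13th Gen vs. 12th Gen).
quoted_gains = {
    "single-threaded (mobile)": 1.08,
    "multi-threaded (desktop)": 1.34,
    "CPU classification inference": 1.25,
}

if __name__ == "__main__":
    for label, factor in quoted_gains.items():
        print(f"{label}: {factor}x faster, "
              f"about {time_saved_percent(factor):.1f}% less time per task")
```

For example, a 1.25x gain does not mean tasks take 25% less time; the same work finishes in 1/1.25 of the time, which is about a 20% reduction.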

Another area that’s important to our customers is an easy upgrade path. And so these 13th Gen processors are socket compatible with the 12th Gen Intel Core processors to deliver that easy upgradability, both for our ecosystem as well as for customers who have deployed solutions and now have an opportunity to more easily upgrade. I mentioned before PCI Express Gen 5 connectivity. So, in our mobile products it is the first generation to include that to deliver a faster pipeline for more data throughput. A great example of use cases that benefit from that would be medical imaging, which requires a tremendous amount of visual data.

I wanted to talk a little bit about a specific customer example of where we’re seeing improvements, gen-on-gen from 12th Gen to 13th Gen. And one particular company that we’re working with is Hellometer. They’re a great example of a company that is digging into those gen-on-gen performance gains and also achieving platform flexibility in the process. So, Hellometer, what they’re focused on is they are a startup; they have a SaaS solution specializing in AI for restaurant automation. And if you look at what 13th Gen is capable of delivering for their application, they can deliver now more AI performance at the edge cost effectively for their target market, which is fast food restaurants and quick service restaurants.

So if you look at these restaurants, and specifically in the drive-through or consumers in the dining room, time is truly of the essence; it translates directly to revenue for these restaurants. And if a line is too long guests will drive past, or they won’t go back and they’ll find something to eat elsewhere. So that’s why these brands are really focused on utilizing Hellometer’s technology, which is computer vision–based technology. It’s a restaurant-automation solution, and it uses our prior generation of Intel Core mobile processors with the built-in AI acceleration in the processor itself—I mentioned DL Boost and OpenVINO before. So, Hellometer uses those technologies, and as a result of using their solution, these restaurant and franchisee operators can learn how to optimize their guest experience and get those meals out swiftly and build that customer and brand loyalty.

And Hellometer’s CEO joined us for the launch, and he has talked about how the 13th Generation Intel Core processors will enable them to add an extra video stream to their solution, which actually increases their ability to process customer data by over 30% for real-time inferencing without a discrete AI accelerator. It’s just using the technology that is integrated into the processor. And so this is really exciting for us to talk about these examples, because it really gives our customers the ability to win business by better understanding their guests’ experiences, and it delivers innovations that really drive business value. And we’re really excited to partner with our customers in examples like this.

Christina Cardoza: I love hearing all those examples. I mean, we’ve talked about manufacturing, healthcare, retail, hospitality, so this is really hitting all sectors and improving businesses across all these different industries. And one thing that I’ve noticed that you’ve mentioned is that these businesses and organizations, they’re just getting smarter and smarter. And so that is increasing their network workloads. And everybody wants to move closer to the edge to get those real-time insights, like you were just describing with Hellometer. So I’m wondering, how else do you see the 13th Generation Core processors being able to provide new opportunities, provide new improvements as network workloads get larger, and we just move closer and closer to the edge?

Jeni Barovian Panhorst: Yeah, there’s just an incredible breadth of use cases that we’re supporting, and a lot going on. If we look in military applications, we have an opportunity to support embedded computing for vehicles and aircraft, or edge devices for intelligence and safety and recon. Next-generation avionics with multitasking performance and durability requirements for space-constrained and stringent-use conditions. We’ve got healthcare advancements. If you look at enabling ultrasound imaging, endoscopy, clinical devices—all these different use cases—it’s really a massive amount of visual data that has to be processed.

So customers can really take advantage of multitasking on that performance-hybrid architecture that we talked about, high data throughput that’s enabled through PCI Express Gen 5, and AI tools that enable developers to unleash the power of the underlying silicon to support these imaging workloads and inferencing workloads. And also, when you talk about a lot of these markets, they have very long life cycles for qualification and certification. And they’re in service for a very long period of time as well. So the long-life availability of our products ensures consistent supply for repairs, for maintenance, and to really drive value from these long life cycles.

I talked a little bit about hospitality when I was talking about the Hellometer example. There’s all kinds of other applications as well, including video walls and digital signage. You know, AI-driven, in-store advertising, interactive flat-panel displays—these can all take advantage of our 13th Generation Core processors to offer a great solution for retail and service and hospitality industries as well. Industrial applications, like AI-based industrial process control, we’re seeing incredible innovation from our partners, leveraging 13th Gen Intel Core processors to really converge powerful compute and AI workloads in situations where you’ve got space constraints and power constraints.

And there’s another example I wanted to talk about in this area, and that is our partner Advantech. They’re focusing specifically on AMRs (which I mentioned before, autonomous mobile robotics), which are truly becoming a new normal in areas like warehousing, logistics, and manufacturing environments. And this market is just growing incredibly quickly. It’s growing over the course of the next couple of years at over a 40% compound annual growth rate. And so, tremendous opportunity. It’s a question of how do we address that opportunity and enable our customers to extract that value. So, these AMRs and these other computer vision applications are really challenged by the need to provide powerful AI and camera-based inputs, but in very small form factors. And AMRs, in particular, may need to process data from multiple different cameras, as well as proximity sensors, so that they can navigate safely around their environment.

So if we look at what Advantech is doing, they’ve got a couple of offerings that leverage the 13th Generation Intel Core mobile processors to address what’s needed for both compute and graphics-processing performance, but also the power-efficiency needs of automated applications that are being used in AMRs, as well as optical inspection. So, each of these solutions really benefits from the fact that you can get adaptive performance from the 13th Gen Intel Core mobile processors that feature that performance-hybrid architecture, but also that intensive graphics processing that we get from the integrated Iris Xe graphics, as well as the memory support that we get from DDR5.

And then, last but not least, they certainly benefit from the really great power efficiency in the latest processor generation. And that also contributes to helping our customers improve their total cost of ownership, and focusing on areas that are important to AMRs, like longer battery life to boost operational duration of robots on the factory or the warehouse floor. So, just a lot of really exciting innovation going on across these different edge and IoT use cases.

Christina Cardoza: Absolutely, and I can’t wait to see what else partners come up with when they’re powered by the 13th Generation Core processors. Just talking about the AMRs—autonomous mobile robots—this is just like, I can’t believe we have these robots operating across factory floors, helping out in the production line. And it’s all thanks to Intel technology, and it’s only going to get smarter and better from here.

But, unfortunately, we are running out of time. Before we go, I just want to throw it back to you one last time if there’s any final thoughts or key takeaways you want to leave our listeners with today.

Jeni Barovian Panhorst: Yeah, absolutely. You know, as we’ve discussed already, there’s a huge diversity of use cases and deployment models across network and edge computing infrastructure, and Intel’s product portfolio needs to comprehend all of those needs. Our mission really is to deliver the hardware and software platforms that enable infrastructure operators and enterprises of all types to adopt an edge-native strategy. And we’re really guided by those priorities with the goal of delivering that workload-specific performance and truly leadership performance for our customers at the right power at the right design points, servicing all of our customers’ needs, and really ultimately improving their total cost of ownership and their value.

And so we need to meet all of these design points across the spectrum—whether we’re talking about the devices themselves, the edge infrastructure, the network infrastructure, the cloud—our customers also want to be able to scale their software investment in whatever parts of our portfolio that they’re using. And so we’re really focused on this mission of being a catalyst for that digital transformation, and improving that business value. We’re focused on driving and democratizing AI, and making it accessible across the full ecosystem. So, with these latest 13th Gen Intel Core processors, we’re really proud to be delivering that next generation of diverse, edge-ready processors, and giving our customers more choices in leveraging this hybrid microarchitecture to unlock all these possibilities. So I’m really excited to partner with everyone here in the audience to realize the full breadth of these technology innovations, and really the promise of the future that’s built on AI-enabled edge computing.

Christina Cardoza: Absolutely, and I think we talked a lot about edge in this conversation. I see the intelligent edge being a huge trend, not only this year, but the next couple of years. And so it’s great to see how Intel is supporting that and helping organizations reach their goals and really realize the full value of their operations. So, thank you so much, Jeni, for joining the podcast. It’s been a pleasure talking to you and a great conversation.

Jeni Barovian Panhorst: Thanks so much. It’s been great.

Christina Cardoza: And thank you to our listeners for tuning in. Until next time, this has been the IoT Chat.

The preceding transcript is provided to ensure accessibility and is intended to accurately capture an informal conversation. The transcript may contain improper uses of trademarked terms and as such should not be used for any other purposes. For more information, please see the Intel® trademark information.

This transcript was edited by Erin Noble, copy editor.