Christina Cardoza: Hello, and welcome to the IoT Chat, where we explore the latest developments in the Internet of Things. I’m your host, Christina Cardoza, Associate Editorial Director of insight.tech. And today we’ll be talking about how augmented reality is transforming the healthcare space with Shae Fetters from Novarad and Dr. Michael Karsy from the University of Utah. But before we dive into our topic, let’s get to know our guests. Shae, welcome to the podcast. What can you tell us about yourself and your role at Novarad?

Shae Fetters: Hi, thanks for having me. Currently I’m VP of Sales over the Western United States, but really I work across the whole nation, and internationally as well, helping physicians like Dr. Karsy feel comfortable using this technology and providing support to them as needed. So, that’s a major part of what we do here, is helping these doctors be comfortable with this new technology and helping it benefit their patients and results.

Christina Cardoza: Absolutely, and of course the conversation is just strengthened by having Dr. Karsy here so that we not only will learn about what this technology is and how it’s being implemented in hospitals, but we can actually learn about the benefits directly from doctors. So, Dr. Karsy, welcome to the show, and please tell us more about yourself.

Dr. Karsy: Thank you so much for having me, Christina and Shae. So, my name's Mike Karsy. I'm an Assistant Professor of Neurosurgery, Neuro-Oncology, and Skull Base at the University of Utah, and I got really interested in augmented reality as I was looking at different imaging modalities for surgical treatment. I heard about Novarad, which is just an incredible company, homegrown out here in Utah, in American Fork. We've been collaborating on various research projects, moving augmented reality toward the clinical space, finding where the applications lie, and trying to develop the technology together. It's been a great partnership with the company, both to learn from them and to be able to get this implemented for treating our patients.

Christina Cardoza: Can't wait to hear more about that. I think this is going to be an exciting conversation. Because we've seen huge transformations in the healthcare space over the last couple of years already, from mainstream telehealth adoption to remote monitoring. But advanced technologies like augmented reality are enabling even more opportunities, and they're benefiting both patients and providers. So, Shae, I'm wondering if you could set the stage for us and kick off the conversation. How is augmented reality transforming this space, and what are some of the new opportunities now available with this technology?

Shae Fetters: I think we’re just really on the forefront of this evolution. We really don’t understand the full depth of what the possibilities are at this point. Dr. Karsy has a vision of how this can be used; we’ve got a different vision as well that helps merge everything and make it possible.

Currently, we take medical imaging, the simple X-rays and CT scans and MRIs that patients get and that doctors use to help diagnose what's going on. And we've been using that technology for a lot of years at Novarad. We're now taking that and merging it with AR in order to give those doctors, those surgeons and proceduralists, that technology at their fingertips while doing these procedures. We're not looking to replace what they do; we're just looking to give them little bits of extra information to make those procedures go more smoothly and seamlessly, to get better results for those patients and get them back to their lives quicker. So really we're looking at it in a lot of different realms, whether it's a highly complex surgery or something routine that would be made safer by having that imaging on hand and ready to use.

We look at it also as patient education. We find that a lot of patients, they’re still—they’re worried about what’s happening during a procedure or what the doctor’s trying to explain to them. And I think sometimes there’s this disconnect, and doctors do the best they can to try to explain what’s in their head and what they understand of this world of our complex bodies to the patients. And augmented reality helps give the patients a vision and see exactly what it is that surgeons are talking about, and it helps them feel more comfortable and more part of their care. And that helps get better results all around if patients are understanding those things and feel comfortable with that.

We also see it in training new physicians, both in surgery and in their clinics, streamlining that. And we've done some studies with Dr. Karsy showing that augmented reality in training substantially increases the effectiveness and the speed of learning. And that's really just scratching the surface of what the possibilities for AR are in the healthcare space currently.

Christina Cardoza: Yeah, it’s amazing to hear, because when I think of augmented reality, it’s typically consumer-focused or gaming and, of course, technology has just advanced so much and it’s bringing all these new possibilities, like you just mentioned. And Dr. Karsy, you mentioned this a little bit in your intro, but I would love to learn more about how you heard about the use of augmented reality in operating rooms. What made you come to Novarad, and what are the benefits you’ve been seeing so far?

Dr. Karsy: Yeah, so, augmented reality is pretty new and a lot of times hard to even just explain what it is to a patient or another physician even—what is it that you’re actually seeing? And image guidance in neurosurgery has been around for quite a long time. It started at a very rudimentary level and it’s essentially now standard of care, where we constantly use a guidance system to allow patient DICOM images, MRIs, or CT scans to be used intraoperatively to help with surgery.

And so, as I was hearing about augmented reality, I wanted to see where it was at in the healthcare space. You hear about it in gaming and in commerce and other industries. As I was looking around the healthcare space I came across Novarad and reached out to them. And being in Utah as well, I thought it'd be interesting to work with the group. And they were way ahead of everybody else that I could see. I mean, they had a technology that was FDA cleared for preoperative planning, as well as intraoperative use for spine instrumentation. We worked on a number of cadaver projects; we published a paper in The Spine Journal on accuracy of medical instrumentation with augmented reality. And then we published another paper looking at accuracy of ventriculostomy drain placement in the head using augmented reality, and we had some really impressive results from both of those papers and our discussions with other physicians.

And so, for me, I thought this could be kind of the next step in imaging for patient care. I mean, I could see already the benefits of navigation for surgical care in spine in making things minimally invasive, as well as in cranial applications where you can really hone in on complex cranial anatomy, and I thought that this is just going to be the next step.

And I think Shae alluded to something quite interesting, which is that most surgeons, as they get more experienced, develop essentially a mind's eye, a kind of X-ray vision of patient anatomy and what they expect to see in surgery. But that takes years to develop. You know, our residency training is seven years long. If you do additional fellowships, that's an additional year. That's just neurosurgery. And if you think about it, every other surgical specialty has all this other anatomy and nuanced instrumentation and procedures to learn, so each field becomes more and more complex. And what I thought with augmented reality was that you could essentially take these holograms and DICOM images, exactly what you see in two-dimensional space, and apply them directly to the patient right in front of you.

And so we've shown patients their images, and we have used it for presurgical planning, trying to manipulate the hologram into the position a patient would be in during surgery and seeing if your mind's eye is able to better recognize the features you're looking for. We've also used it in surgery to help identify lesions. And as Shae mentioned, we really are at the beginning of how augmented reality is going to be used in healthcare. We don't know the best applications for it, and we don't know exactly where it adds specific value for specific cases, because this technology is so new. All those things are yet to be discovered, and it's really, really exciting to be able to work on it and see where it's going to go.

Christina Cardoza: I'm sure. And I'm sure it's also important, when you think about these new applications and possibilities, to have a partner like Novarad that you can work with to really make those dreams a reality. And you mentioned that sometimes it's even hard to describe this to patients and help them understand what it is you're actually doing and how it works. So I'm wondering, how do you get patients as well as your staff comfortable with using this technology? How do you implement it in the current learning or operating structure you have?

Dr. Karsy: Yeah, I mean you're basically asking how it fits into the current workflow. It's evolved. My partnership with Novarad has been going for over a year, and we've seen this technology continuously change and develop, basically in ease of use, the verbal commands you use to run the system, and the ability to record videos that you can just show patients to demonstrate things that way. A lot of features have been added over the last year that I've been working with them.

In terms of the clinical workflow, Novarad's system is basically cloud based. There's software that they have allowed me to install here at the U to upload images to their cloud-based system and generate these hologram images, and then you can download them directly to our headset over Wi-Fi. You don't actually need a terminal system or computer sitting at your site; you basically just need internet access and you can get this thing up and running.

So that's been kind of exciting, and I'd say our staff is definitely not yet comfortable with this. It's so new that I basically set it up for them. And if a surgeon's telling you that they're setting up imaging, that means it's going to be easy to set up, because surgeons are notoriously short on patience and don't want to spend time getting things set up. So I set it up and show our staff, "This is what the hologram looks like. This is what it looks like on a patient." Many of our staff have worked on patients for decades, and this is kind of the first time they can see in three dimensions what these tumors will look like. It's kind of incredible: our staff are always wowed the first time they see what augmented reality actually looks like.

Christina Cardoza: Yeah, and it sounds like it may be a little less invasive for the patients also. So, I want to drill down a little bit into the technology components that actually make this possible. Dr. Karsy mentioned cloud-based software, among other things. So, Shae, I'm wondering if you can expand on some of the hardware or technology components that go into this, and how Novarad is making AR-guided surgery possible.

Shae Fetters: Really it comes from a core foundation of the company. The company as a whole has been dealing with medical images and providing these picture archival systems for hospitals for 30-plus years. So that's been a huge part of the foundation of knowing how to use these images in the first place. We've been doing that on computers with doctors for a lot of years. Now we've been able to change what the monitor is for these doctors by condensing it down to these headsets.

That's been a really tricky task that's come with a lot of trial and error; we've been working on this particular project for about seven to eight years now. Part of that has come from some of the amazing talent that we have in house: some of the computer programmers, the AI developers, and things like that. Some of it's come from the vision of the CEO of our company; he's done an amazing job as well. Being able to condense it down so that all you need in the operating room is this headset that Microsoft makes has been pretty amazing.

Really it has come from years of working with that technology. Like Dr. Karsy talked about, all you really need once you get into your surgery is a Wi-Fi connection. We've got it to the point where you can look at a simple QR code, and it downloads your patient's study onto the headset in about 20 seconds or so. Then there's linking that to the patient, if the patient had special codes put on them for the scan. That whole process (downloading the study, calibrating the lens, letting it learn the space it's working in that day, and then accurately matching the images to the patient's anatomy) takes a total of about two and a half minutes at this point.

As Dr. Karsy mentioned, a year ago that was not the case. We've continued to develop it through a great partnership with him, figuring out better workflows so that this isn't as scary and complex for surgeons and hospital staff, and trying to get the right flow so that everybody's comfortable using these. That way it's not a big deal when the doctor says partway through a case or before a case, "Hey, grab my headset, we're going to guide this with VisAR."

So it's been really great to continue those things, and we're continuing to refine them. It's not quite to the point where it's absolutely amazing, but it's pretty mind-blowing that we are able to do this without having multiple pieces of huge equipment in the OR. As Dr. Karsy mentioned, traditional navigation has become standard in the operating room, and it actually takes several pieces of equipment in the OR suite. And those OR rooms seem to become smaller and smaller with all the equipment that needs to be packed in to provide the right care for these patients.

Christina Cardoza: So, you mentioned VisAR, which, of course, is the Novarad solution that Dr. Karsy and his team are leveraging to make this possible. And you also mentioned working with Microsoft on this headset, the power of the partnership with Dr. Karsy and his team, and maybe some of the other equipment manufacturers you're working with. You know, the power of partnerships is something big on insight.tech. We're seeing that to make all of the things we envision for the future possible, it really takes the partnerships you're talking about. And I should mention that insight.tech and the IoT Chat as a whole are Intel® publications. So I'm wondering if you can tell me a little bit more about how you work with partners like Intel as well as Microsoft, and what the value is, from Novarad's view, of working with them?

Shae Fetters: I mean, it would be huge for us to try to do everything in house. Really, the power of the microchips and the power of the HoloLens, that whole hardware end of things: there's no way Novarad as a company could develop that. We're only about 150 people right now; we're a fairly small company by industry standards, which makes it pretty amazing that we've been able to produce this type of technology, but that's only been possible through different partnerships.

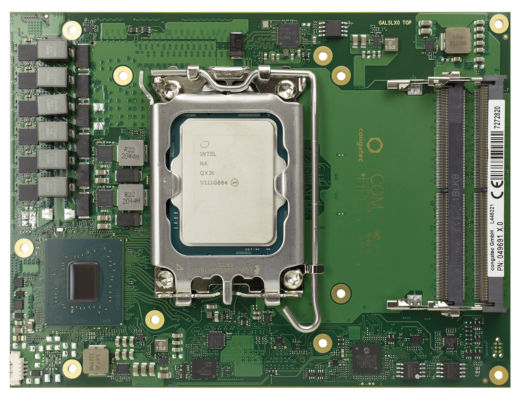

I mean, Microsoft has an amazing headset that they continue to develop. We started with their first version several years ago, the HoloLens 1. And thanks to their innovations, we've been able to do additional things since they released the HoloLens 2, things the first version couldn't handle. But if we had to spend the resources and the time to develop the headset on top of all the software, I don't think we could ever keep up with Microsoft. The inside parts of that headset, the chips by Intel: we don't produce microprocessors here, that's not what we do. So it's great to collaborate with companies that also have some great innovative minds and some amazing talent.

We do work with other companies because we’re not looking to change what these surgeons do, necessarily. Dr. Karsy might have a certain set of instrumentation that he likes to use in his procedures, whether it’s cranial, where his focus is, or spine, where he also does procedures. We’re not looking to lock surgeons into only what we want them to use. We just don’t think that’s very fair, and it really doesn’t lead to the best patient care overall. And so we try to work with most of these companies that come to us and say, “Hey, we’d love to partner with you so that we have a collaboration.” And that’s been amazing for us.

And really we couldn’t have done it without brilliant minds like Dr. Karsy. And there’s a lot of doctors across the country that are excited about this and share their different ideas. And it’s very much an open and collaborative network that we’ve got that we’re working with. There’s not competition between us, and there’s not competition between the surgeons even; they want what’s best for patient care and moving healthcare forward. It’s not a selfish venture.

Dr. Karsy: I definitely agree with what Shae said. From our perspective, working with industry has been a great partnership, as there's no way one single academic center is going to develop 30 years of experience with PACS and imaging and DICOM like Novarad has, and then develop augmented reality on top of it.

I think that, from a clinical standpoint, we've really focused on what we do well, which is the surgery part and the clinical part and that management, and partnered with a group that really has expertise in radiology and augmented reality. That's kind of the way to innovate. I think we've had really good success by doing it that way.

Christina Cardoza: And of course, surgery is such a delicate process that I can see the importance of working with big technology giants like Microsoft and Intel, making sure the technology that goes into this is fast and optimized and that you're getting all this information in real time, because that's really going to count. And I just know that Intel chips and processors are getting better and faster every year.

And so, Dr. Karsy, we mentioned a couple of other different transformations in the introduction, like telehealth and remote monitoring. Of course this does not compare to the augmented reality portion that you can bring into surgery and operations, but I’m wondering how this technology compares to some of the advancements that you’re seeing in the healthcare space, or how it even compares to the traditional way of things that you guys have done.

Dr. Karsy: Yeah, thank you. I mean, I think what’s interesting is the technology as it develops—and we talked about the Intel chips—and as it becomes stronger, better processing speed, and is able to handle more operations, it generates newer technology that can do more. Well, that’s kind of what we’re seeing in healthcare, is as soon as we have this new technology—a better HoloLens, a better augmented reality system, software that works faster—it changes everything you can do. You have now the ability to go back to the procedures that we used to do the same old way for decades, and you can now do them differently; you can do them minimally invasively with smaller incisions; you can get more accurate.

I think AR overall, if you look at the different spaces, and I’m by no means an expert in AR, but when I’m looking at different spaces like gaming or commerce or healthcare, healthcare has this incredible impact right away. And it’s been using images for decades; it’s like the perfect setup for seeing this technology be implemented, it really is. The other technologies in healthcare, at least in the neurosurgical space, really focus around becoming less invasive. So, they have to do with spine instrumentation that you can do minimally invasively, technology to minimize the size of craniotomies or even eliminate craniotomies.

If there's a day when I don't have to do another brain surgery, that would be a great day, because it would mean that we have developed enough medical therapy, radiation, and other things that patients can avoid needing surgery, and it will take a long time to get there. But that's the ultimate goal: to reduce the harm to patients as much as possible, and technology drives the way forward, one hundred percent.

Christina Cardoza: And of course we mentioned that this is only scratching the surface; this is just the beginning of what this technology can do and what we hope it will do in the future. So, I'm wondering what hopes you have for AR-guided surgery, or for advancements in technology in the healthcare space, going forward?

Dr. Karsy: From my standpoint, imaging technology has been widely used in neurosurgery, and we can see how navigation has changed the way we do things. A lot of times when we collaborate with other surgeons and they come into our wards and see our navigation systems, they're wowed by it, because such a thing doesn't exist in many of these other surgical fields. We haven't had the ability to do that. I think AR will change all that.

I think you're going to start to see image guidance and minimally invasive procedures enter other surgical subspecialties and spaces in healthcare where they didn't exist before, because we just didn't have a way or a need to do it. And now we have a technology that can potentially aid those other fields. So I think that will change everything and lead to new procedures, techniques, all sorts of things. And this will be a many-decade kind of development; it's not going to happen all at once, but that's what I see happening.

Christina Cardoza: Of course, and Shae, how do you hope VisAR and Novarad in general will be a part of that future, and how will you continue to bring more opportunities and possibilities to the healthcare space?

Shae Fetters: Yeah, we've got a similar vision to Dr. Karsy's. We think about the new technologies that come about in healthcare and how they change things. I think a lot about X-ray and CT and MRI alone, and how we can put people in this little machine for a couple of minutes and then tell from these black-and-white pictures what's going on inside them without needing to open them up and look physically. I mean, that was a pretty revolutionary thing, and much less invasive.

We see AR as a similar move, a new wave in how we treat patients and in the information available to surgeons and patients alike, so that there's greater understanding. And Dr. Karsy will probably speak to this as well: there's a huge collaboration between surgeons and their patients that we don't really talk about much, but the patient ultimately has as much responsibility for their care as the doctor does. And the patient understands more when it's not this foreign black-and-white MRI image. Really, I don't remember how many years it took me to learn how to read CTs and MRIs, because it's such a foreign language. AR makes it much more understandable, not only for patients but also for new physicians coming out.

We really do see this becoming a standard of care in the next 10 years or so, where every surgeon, every doctor has their own headset and it's a core part of how they treat patients and how they interact with them.

Christina Cardoza: Yeah, that’s a great point—making this technology and healthcare in general more accessible and consumable for patients is going to go a long way to making this technology really take off and be mainstream adopted. Unfortunately, we are running out of time today, but this has been a great conversation. And so, before we go, I just want to throw it back to each of you for any final thoughts or key takeaways you want to leave our listeners with today. So, Dr. Karsy, I’ll start with you.

Dr. Karsy: Thank you again, Christina and Shae, for the opportunity to speak with you. I'd say AR technology is one of those things where the more you learn about it, the more you start to envision where else it could be applied. And I think in healthcare that's really exciting, because there's still so much that's unknown. We're learning so much every day in every field. And to have new technology that allows you to continue to come up with new discoveries and implement them and, as Shae said, teach our patients better and make it more intuitive, that's great. I think that's the way things should be going forward, and I'm really excited to see this continue to develop in my career.

Christina Cardoza: Absolutely. And Shae, any final thoughts or key takeaways you want to leave our listeners with today?

Shae Fetters: Yeah, I just think that this really is an amazing technology that we're coming across. And when trying to explain this to people, it's difficult to find the right words. I always watch their faces when I put a headset on a new doctor who has not used this technology at all. When I walk them through some of its basic functions, every time, within 30 seconds to a minute, I see their eyes open wide and they understand; they start to see the possibilities out there. So I encourage any physicians, and any healthcare systems looking to improve their patient care, to reach out. It's worth a conversation on how we can collaborate to provide better care for all the patients in our communities.

Christina Cardoza: It’s great seeing how this technology is being used today, and I can’t wait to see what else you guys come up with in the future. I just want to thank you both again for the insightful conversation and joining the podcast today. And thanks to our listeners for tuning in. If you liked this episode, please like, subscribe, rate, review, all of the above on your favorite streaming platform. And, until next time, this has been the IoT Chat.

The preceding transcript is provided to ensure accessibility and is intended to accurately capture an informal conversation. The transcript may contain improper uses of trademarked terms and as such should not be used for any other purposes. For more information, please see the Intel® trademark information.

This transcript was edited by Erin Noble, copy editor.