Immersive retail is what physical retail stores need as they compete with the convenience of online shopping. In 2020 alone, the United States saw a headline-making 43% increase in e-commerce sales and has continued to see a gradual rise since.

“Recognizing the competition, in-store retail is evolving to differentiate itself from its online counterpart,” says Matt Tyler, Vice President of Strategic Innovation at Wachter, a technology integration and services company. “Retailers are looking to create a destination that lures customers back in.” Holographic technology is helping immersive retail experiences do just that.

Wachter is leading the way with its Proto Integrated by Wachter solution, an innovative display system for creating, managing, and distributing holographic content. It enables brands to beam their live and previously recorded content from a multimedia studio or mobile app.

From there, the Proto cloud beams the content to device locations, even thousands of miles away. People at those locations can interact live with the person being beamed in, who appears on the display as a 3D holographic projection, creating unique, impactful guest experiences.

The Wachter solution, Proto Epic, has three components: a standalone seven-foot unit with a transparent LCD monitor and a light box, a studio kit to create content, and a Live Beam kit with a 4K camera to beam content into any Proto device anywhere in the world. “The experience can be made even more immersive by integrating lighting and audio systems,” Tyler says. “It’s really meant to draw in all the senses.”

From shoppers looking for personalized immersive retail experiences to celebrities interacting with fans, Proto’s holographic display and capabilities allow for two-way interaction in real time.

Advancing Retail Insights Via Augmented Reality

Experiences that wow are part of the appeal of holographic teleportation, but the technology is about more than just theater. Using Proto, retailers can gather valuable intelligence that can increase revenue. An Intel® RealSense™ camera embedded in each unit can anonymously track shopper traffic and behavior. The Wachter integration crunches these records to “extract valuable data that marketers are looking for,” Tyler says.

The Proto unit can also dynamically change its content depending on the audience. A loyalty card swipe can yield even more tailored material by connecting, for example, online shopping behavior with in-store beamed content.

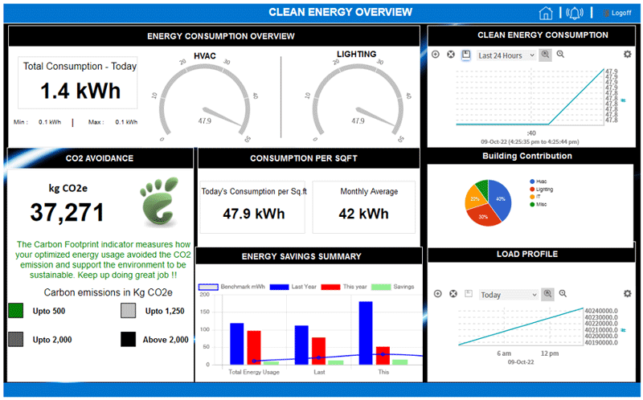

Wachter provides end-to-end solution integration so customers can deploy Proto at scale. A team of certified experts handles design, architecture, procurement, installation, and maintenance, including power and cabling, across venues. The integrator also helps customers manage their content and generate shopper analytics by analyzing metadata from video feeds, then pipes this information into a dashboard for easy visualization. Proto uses AI to sift through the video analytics data and customize streamed content in real time.

Intel® technology drives data processing on the Epic unit as well as in-studio production of content. “There’s an Intel high-performance processor that is capturing all the video and syncing that back with the units themselves out in the field,” Tyler says. “The cloud, used for storing and redirecting content, also uses Intel architecture. Intel is the common glue between all the different pieces of the solution.”

Holographic Technology Offers Endless Use Cases

Proto’s ability to project 3D images of sought-after experts or celebrities into stores is a draw for an adventure retailer with close to 60 locations. The retailer’s biggest points of sale are in Manhattan, where it plans to use Proto to beam in outdoor guides from more rural areas. According to Tyler, a one-on-one consultation with a guide gives new meaning to the term “virtual shopping.” For instance, customers can find out which flies the fish are biting on or what clothing to wear for the weekend’s outdoor conditions, which could increase the average spend per customer.

A fashion retailer plans to place the Proto Epic alongside shelves of apparel. The apparel will be featured in a fashion show streamed through the unit, and as the model wears each piece, the corresponding item on the shelf will be highlighted with special lighting. “It’s a fun way to take the guesswork out of the buying process and allows people to make a decision much faster,” Tyler says.

Tyler expects different types of hologram technology to gain traction in other sectors as well. One potential application is helping government officials meet with global representatives while keeping travel to a minimum. “We see the collaboration, the communication, the energy savings, all coming together,” he says.

In another use case, a museum is exploring Proto as a way to showcase artists’ work. In higher education, a professor in Massachusetts could give a lecture that is beamed to students on campuses around the world. Because the hologram technology is bidirectional, students can participate in turn.

“The capabilities to create that three-dimensional experience and facilitate a conversation at the same time, the way you ingest content is what is transformative,” Tyler says. “I don’t think any other technology can deliver quite that capacity right now.”

This article was edited by Christina Cardoza, Associate Editorial Director for insight.tech.

This article was originally published on November 3, 2022.