Christina Cardoza: Hello, and welcome to the IoT Chat, where we explore the latest developments in the Internet of Things. I’m your host, Christina Cardoza, Associate Editorial Director of insight.tech. And today we’re talking about smart and sustainable buildings with Graeme Jarvis from Johnson Controls and Sunita Shenoy from Intel®. But before we jump into the conversation, let’s get to know our guests a little bit. Graeme, I’ll start with you. Welcome to the show.

Graeme Jarvis: Thank you, Christina. And it’s a pleasure to be with you again, Sunita. My name is Graeme Jarvis, and I’m Vice President within Johnson Controls’ global digital solutions business, where we are all about helping our customers realize their smart, sustainable business objectives within their built environment. My role is focused on commercial leadership, so I engage with our large-enterprise customers globally and with our key-partner enablement programs, Intel being a great example.

Christina Cardoza: Great to have you, Graeme. And I should mention before we get started that the IoT Chat and insight.tech as a whole are sponsored by Intel, so it’s always great when we can have somebody from the company represent and contribute to the conversation at hand. So, Sunita, welcome to the show. What can you tell us about yourself and your role at Intel?

Sunita Shenoy: Yeah. Thank you, Christina. And it’s my honor to be on this chat with Graeme and yourself. I’m Sunita Shenoy, Senior Director of Technology Products in Intel’s network and edge computing group. My organization is responsible for technology products, requirements, and roadmaps as they relate to the industrial sector. The industrial sector includes manufacturing and utilities, and buildings are part of the infrastructure within that sector, both because of their energy consumption and because they house these critical infrastructures. So my organization is responsible for bringing the silicon products, as well as the software technologies, required to enable this transformation into smart infrastructure.

Christina Cardoza: Great to have you, Sunita. I love how you both mentioned this idea of meeting business objectives within the built environment, because buildings, and smart buildings in particular, are the topic we’re talking about today. Remote work has gained huge momentum in the last couple of years, and it’s here to stay. But people have started returning to the office in a hybrid work environment, where not everybody is in the office all the time and fewer people are coming in overall. That makes it a challenge for businesses to figure out how to make the best use of their space. And, as you mentioned, it has big energy consequences too: Do we need to have all the lights on and all of these systems operating in a building that’s sometimes empty, half full, or otherwise not at capacity? So, Graeme, I’ll start with you. What challenges do businesses have to think about now regarding their physical office space as people return to work in this hybrid environment?

Graeme Jarvis: Sure. It’s a great question, Christina, and it’s so relevant right now. COVID actually served as a catalyst for what we’re now going through, which is the new normal. So, what does a hybrid work environment mean? I think there are two key components. One is the people, be they employees, guests, or building owners. The other is the built environment itself and how it needs to adapt to the new normal, which, as we see it, centers on sustainability, workplace experience, and safety and security within the built environment.

So, before COVID-19, I think we’re all familiar with the fact that most of us worked at an office almost every day. The pandemic proved that we can actually be productive from our home office or on the road, whether that home office is nearby, within the same country, or even abroad. That has been proven. So now the challenge, on the employee side of a hybrid work environment, is: What would that mean to me? I would like it to be appealing. I’d like it to be easy to go in and out of work. So how might one do that? That gets into key enabling technologies, touchless technologies, and having a sense of control over the experience.

We happen to have a solution called OpenBlue Companion, which is an app that allows employees and guests to do hot desking and book conference rooms, and to pretreat those conference rooms based upon the number of people expected for a particular meeting. There’s cafeteria integration and parking and transportation integration, so that going to the office is actually a pleasant experience. On the building side, the hybrid work environment is really a financial question: How do I optimize the footprint I have, and what am I going to need moving forward to support my employees? That’s where we are right now: companies are trying to rationalize what they have and what they will need.

So, with some of our solutions, enabled by compute and AI from Intel, for example, we are able to understand what is in motion today and give a client an assessment of what they have and how efficient those solutions are, based upon the outcomes they’re trying to realize. Then they have an objective. They would like to be more productive. They would like to reduce expenses. They would like to have a safe, sustainable workplace. So now you’ve got interdependencies among the heating, ventilation, and air conditioning system; the number of people in a building, from access-control information; and the time the cafeteria should start preparing food, based upon how many people are in the building. All of this is interconnected now. So an optimization question starts to present itself to management: What should my environment look like? How should it look in the future? And what we’re seeing today is a template for the building of the future. People are rationalizing and optimizing what they have, and they’re taking the lessons learned and starting to apply them to their “building of the future,” be it stadiums, ports and airports, or traditional office space.

Christina Cardoza: Yeah. You bring up a lot of things to consider when going back to work. And I want to come back to OpenBlue and how you actually make these buildings more energy efficient. But, Sunita, I’m wondering, from an Intel perspective, what are the implications you’re seeing of a hybrid work environment as it relates to the business and energy usage?

Sunita Shenoy: Yeah. As Graeme was stating, working from home became the new norm over the last three years. But as businesses ease their workers back to work, be it hybrid, remote, or on site, they have to make it comfortable for the workers coming in by providing frictionless access, right? You don’t want people walking in and opening the doors by hand, because now it needs to be safe from germs, right? So you make it frictionless. You use advanced technologies like wireless connectivity and AI to improve the quality of the workspace: how you book your desk, how you find your rooms, or, if the building is a retail space, for example, how you find your way around without ending up in a crowded environment, right?

So it’s about using data, AI, and technologies such as wireless and mobile to make it easy for workers to ease back into the workplace, because they’ve gotten comfortable being in their own spaces, right? In fact, a lot of the stories I’ve heard are along the lines of, “My office at home is more comfortable than my office at work.” So how do you make the work environment as comfortable and safe for people as their home is? That’s really the implication, and technology can play a big role in implementing these solutions. But deployment is one of the key areas we need to focus on: How do we make these solutions easily deployable, using solutions such as Johnson Controls’ combined with our technologies?

Christina Cardoza: Absolutely. And it occurs to me that there may be even larger implications. Say you have a building with multiple different businesses in it, and your business doesn’t own the building. That raises the question of who’s in charge of making a building smart or reducing its energy consumption. Is it the building owners, or the multiple businesses within the building? So, Graeme, can you talk a little bit about how buildings can become more energy efficient, and who’s really in control: businesses or building owners?

Graeme Jarvis: I would start off by saying most businesses have an ESG (environmental, social, and governance) plan or set of objectives. Johnson Controls does. I know Intel does. These are used to communicate value-based management practices and social engagement to key stakeholders: employees, investors, customers, and potential employees. At Johnson Controls, we’ve adopted a science-based target and a net zero carbon pledge to support a healthy, more sustainable planet over the next two decades. Our efforts align with the UN Sustainable Development Goals, and since 2017, our baseline year, we’ve reduced our energy intensity by about 5.5% and our greenhouse gas emissions by just over 26%. We have a plan to get to carbon neutral as part of our 2025 objectives, realizing that reaching that carbon-neutral state will take longer, but that is part of our ESG plan.

So the reason I mention that is, once you get into the built environment, somebody owns that building, and they’re going to have some kind of sustainability-footprint objective because, one, it’s the right thing to do, and, two, the economics are motivating businesses to act, because being more efficient saves money. So how would one do that? We help in that regard. Buildings account for about 40% of the planet’s carbon footprint, so if we want to start talking about how to solve sustainability challenges, the built environment is top of mind. It’s at or near the top in every study.

So, once you’re in, you’ve got certain equipment running: heating, ventilation, and air conditioning systems. You have multiple tenants within that building. They typically all pay a fee for the energy consumed by the space they use, but it’s relatively binary, or it’s a step function based upon historical patterns. But what if you could give them insight into what their real usage could be, based upon seasonality, how many people are in the building, when they’re in the building, and when the air should be treated because a meeting room is booked, and then give them control?

And some of our solutions through OpenBlue help clients understand what is actually going on in their environment and where they can improve. As soon as that data becomes available and there’s a financial consequence or a financial reward, behavior starts to change. That’s where it comes back to: How do you enable the behavior you want? And then you get into the hardware, the software, the compute, and the AI that Johnson Controls and Intel can help with. But it really starts with that ESG charge, and with the fact that buildings are a large opportunity for sustainability improvement.

Christina Cardoza: When you think about how much energy buildings use and how much carbon they emit (about 40% of carbon emissions, as you just mentioned, Graeme), I can see why businesses are setting such aggressive sustainability goals to reduce that. And, Sunita, you mentioned that to tackle this problem and make a dent, you really need to deploy the right technology to get data points from all of the different systems Graeme was talking about. So, can you talk a little bit more about the technology that helps these businesses make a dent in those efforts?

Sunita Shenoy: Yeah. Yeah. I think Graeme touched upon some of these, right? It’s not just now, because of hybrid work and the pandemic, that we are realizing this; it’s a known fact, right? Emissions from buildings are something like 40% to 50%, I don’t know the exact numbers, but commercial and industrial buildings contribute a large share of carbon emissions, right? So it is our corporate social responsibility, whether you’re a building owner or a business owner, to reduce that carbon footprint, right? One technology you can use, and that we have used, is AI, which is becoming more capable as sensors advance, right? How do you collect data, bring that data into a compute environment, and apply AI to learn from and analyze it and infer information from it?

That lets us automate the whole process. For example, in the past, building managers (and I’ve interviewed several facilities and building managers across the industry) used manual processes: from 8:00 to 5:00 you keep the HVAC and the lights running, regardless of how the building is actually utilized, right? And that generated a certain amount of energy consumption in the buildings, right?

But once IoT became a reality over the last seven or eight years, we started putting sensors in buildings, taking advantage of daylight, and using AI to automatically track how the building is being utilized. Based on that utilization, you turn the lights, the HVAC, or even the water on or off, whatever it is, right? And that reduced the amount of energy used in the buildings, right?

So, small steps first, right? First, connect the unconnected and assess the data in the buildings, which is a treasure trove, then analyze where you can optimize energy consumption. The first place to start is lighting or HVAC. Then you move on to other sources of consumption, such as the computers that are plugged in or your water utilities: collect all the data, start analyzing it, and decide where you want to start reducing energy consumption. So it’s not just about today, the pandemic, and hybrid work. This has been the process ever since IoT, AI, and other advanced technologies became a reality. It is very feasible. And we at Intel Corporate Services have already made major progress in reducing our carbon emissions.
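The contrast Sunita draws between a fixed 8:00 to 5:00 schedule and utilization-based control can be illustrated with a minimal Python sketch. It is a hypothetical illustration, not Intel’s or Johnson Controls’ implementation: the `HourReading` record, the `schedule_on` and `occupancy_on` policies, and the hourly occupant counts are all assumptions invented for the example, standing in for real sensor feeds.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Assumed fixed schedule matching the "8:00 to 5:00" practice described above.
SCHEDULE_START, SCHEDULE_END = 8, 17


@dataclass
class HourReading:
    """One hour of (hypothetical) occupancy-sensor data for a zone."""
    hour: int       # hour of day, 0-23
    occupants: int  # people detected during that hour


def schedule_on(reading: HourReading) -> bool:
    """Fixed schedule: systems run during business hours regardless of use."""
    return SCHEDULE_START <= reading.hour < SCHEDULE_END


def occupancy_on(reading: HourReading, min_occupants: int = 1) -> bool:
    """Utilization-based control: systems run only when people are present."""
    return reading.occupants >= min_occupants


def run_hours(readings: Iterable[HourReading],
              policy: Callable[[HourReading], bool]) -> int:
    """Count the hours a policy keeps lighting or HVAC switched on."""
    return sum(1 for r in readings if policy(r))


if __name__ == "__main__":
    # Made-up hybrid-work day: people are only in the zone from 10:00 to 15:00.
    counts = {10: 5, 11: 12, 12: 8, 13: 10, 14: 6}
    day = [HourReading(h, counts.get(h, 0)) for h in range(24)]
    print("fixed schedule:", run_hours(day, schedule_on), "hours")    # 9 hours
    print("occupancy-based:", run_hours(day, occupancy_on), "hours")  # 5 hours
```

On this invented day, the fixed schedule keeps systems on for 9 hours versus 5 for the occupancy-based policy. A real deployment would also need details this sketch leaves out, such as pre-conditioning lead time before a booked meeting and hysteresis to avoid switching equipment on and off too often.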

Christina Cardoza: I can definitely see, with all the different systems and data coming in, the importance of AI for managing and analyzing all that data quickly to make business decisions. And there are a lot of different systems, both outside the building, like the parking lot and its lights, and inside, like a cafeteria. How do we connect all of these systems that may never have touched each other before? Graeme, do you want to answer that one?

Graeme Jarvis: Sure, I’ll start, Christina, and I’m sure Sunita has some great insight also. You hit upon a great word: “system.” I like to use a swimming pool analogy. Historically, the security manager was in one lane, the facility manager was in another lane, and the building manager was in yet another. Products were sold to each of those owners, each of whom had a certain part of the building under his or her responsibility. The way to look at this problem is really as an integrated system. That’s why, when we talk about smart, connected, sustainable buildings, you’ve got to get the building connected, which is now happening.

And now you’ve got all of this data from edge devices that are doing their core functions: security; heating, ventilation, and air conditioning; the building-management system; smart parking; smart elevators; and so on. When you pull all of this together, you can start to find patterns and optimize around the heartbeat of what that building should be, given what it’s capable of doing with the equipment and systems that are in place. This is a journey. It’s not something that can be done overnight, but it begins with assessing what you have. That’s one end of the spectrum.

Then you look at where you would like to be three or four years from now from an ESG perspective, and you build a plan to get from where you are to where you would like to be. That’s where most of our customers are on their journey today. When we do that, the assessment phase is really eye opening, because the data is objective; it’s no longer subjective. It’s no longer “I think this might be the case”; it’s crystal clear. Then you can use AI and modeling with building twins. We have OpenBlue Twin, for example, to run “what if” scenarios: If I change this parameter, what might that do to the overall efficiency of the building? So now you can harness information that was latent but is now at your fingertips. That’s some of how we help our clients along that journey.

Sunita Shenoy: Yeah, if I can build on that, Christina, from a technology standpoint, right? In any given building there are a number of disparate systems, right? There could be an elevator system, a water system, an HVAC system, a lighting system, the network that connects your computers, all of it, right? And they all come from different solution providers and different companies. What we advocate, and we’ve seen this across multiple industries and transformations, is to focus on using open standards, right? If everybody builds on open-standard protocols, whether for connectivity or networking, then you are all working off the same standards. So when you plug and play these different systems, you are able to get them to collaborate, however disparate they are, right? You share the data, bring it to a common place, and share information over common protocols. Networking is super critical in bringing all these disparate systems together.
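Sunita’s point about bringing data from disparate systems “to a common place” can be sketched in Python as a set of small adapters that map each vendor’s payload into one shared record. This is a hypothetical illustration only: the payload field names (`zoneId`, `supplyTempF`, `area`, `watts`), the `Reading` schema, and the adapter functions are invented for the example. In a real building, open protocols such as BACnet or MQTT would carry the data, and this sketch does not model either one.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class Reading:
    """Common record that every subsystem is mapped into (hypothetical schema)."""
    system: str
    zone: str
    metric: str
    value: float
    unit: str


def from_hvac(payload: dict) -> Reading:
    # Hypothetical HVAC vendor format: {"zoneId": "floor-2", "supplyTempF": 68}
    celsius = (payload["supplyTempF"] - 32) * 5 / 9
    return Reading("hvac", payload["zoneId"], "supply_temp", round(celsius, 1), "degC")


def from_lighting(payload: dict) -> Reading:
    # Hypothetical lighting vendor format: {"area": "floor-2", "watts": 450}
    return Reading("lighting", payload["area"], "power", float(payload["watts"]), "W")


# One adapter per source system; supporting a new vendor means adding an entry.
ADAPTERS: Dict[str, Callable[[dict], Reading]] = {
    "hvac": from_hvac,
    "lighting": from_lighting,
}


def normalize(source: str, payload: dict) -> Reading:
    """Translate a vendor-specific payload into the common Reading record."""
    return ADAPTERS[source](payload)


def group_by_zone(readings: Iterable[Reading]) -> Dict[str, List[Reading]]:
    """Collect readings from every system under the zone they describe."""
    zones: Dict[str, List[Reading]] = defaultdict(list)
    for r in readings:
        zones[r.zone].append(r)
    return dict(zones)


if __name__ == "__main__":
    readings = [
        normalize("hvac", {"zoneId": "floor-2", "supplyTempF": 68}),
        normalize("lighting", {"area": "floor-2", "watts": 450}),
    ]
    for r in group_by_zone(readings)["floor-2"]:
        print(r.system, r.metric, r.value, r.unit)
```

Once HVAC and lighting data for “floor-2” share one schema, the two readings can be analyzed side by side, which is the precondition for the cross-system optimization discussed in this conversation. The adapter table is the key design choice: a new system only needs its own mapping function rather than changes to downstream analytics.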

Graeme Jarvis: Absolutely right. Take OpenBlue, for example. Part of the name “OpenBlue” is “open.” We are open because no one company can do this alone; hence we have such a great partnership with Intel. With open standards, we can push information to third-party systems, and we can ingest information from third-party systems, all to benefit the customer through applications that deliver the outcomes they’re looking to realize. So this is actually a critical point for the industry. If people listening to this podcast are talking to vendors who have a closed architecture or a closed approach, I would urge some caution and encourage them to think more about an open, scalable, partnership-oriented approach, because that’s where things are going. And it’s extensible to firms that have yet to emerge, but that we would love to partner with, because they’ll have some novel capability that will benefit our customers.

Christina Cardoza: I love the point you both made about being able to leverage the existing technology or systems you have within a building. I think sometimes we’re a little too quick to jump on the latest and greatest new technologies, or to replace the systems we already have. So it’s important to know that there are solutions out there that can connect some of these disconnected systems, and you don’t have to rip and replace everything that’s still working. As you mentioned, Graeme, there are ways for these systems to work together. I want to paint the picture a little bit more for our listeners. There’s been a lot of great information, but I’m wondering how this actually looks in practice, especially with OpenBlue. So, Graeme, do you have any customer examples or use cases of how OpenBlue helped a building become smarter, more connected, and more sustainable?

Graeme Jarvis: Sure, I have a few. I’ll share a couple. One is Humber River Hospital, out of Toronto, Canada. We’ve entered into a 30-year agreement with Humber River Hospital to help reduce their energy consumption by approximately 20 million kilowatt hours per year. We’re doing that by understanding their environment, layering our OpenBlue solution suite on top, and using the rhythm of the built environment to optimize, refine, and then optimize again over a multiyear engagement. So this is a long-term engagement.

The benefit to the client is they have a predictable financial roadmap, and they’ve got leading technology that’s going to help them realize that predictable financial outcome. And we also then help certify that they are indeed attaining those targets from a LEED standpoint and a corporate-stewardship standpoint. So that is one example.

There’s another example with Colorado State University Pueblo. This project is about supplying 100% of the campus’s energy demand from renewable sources, and a 22-acre solar array is being completed. We’re overlaying our capabilities (hardware, software, and professional services, including OpenBlue) to help them realize that 100%-green objective.

Christina Cardoza: Thanks for those examples. I want to go back to a point you made earlier, that no one company can do this alone. Partnerships are essential to meeting our sustainability goals. Sunita, Graeme has mentioned the importance of their partnership with Intel a couple of times. So I’m wondering: What are Intel’s sustainability efforts, and how have you been working with partners like Johnson Controls to meet those goals?

Sunita Shenoy: Yeah, so there is an initiative that Intel calls RISE, which stands for responsible, inclusive, sustainable, and enabling, right? Responsible means that we employ responsible business practices across our global manufacturing operations, as well as in how we partner with our value chain and beyond, right? Inclusive is about advocating for diversity and inclusion in the workforce, as well as for social-equity programs, for example, making sure access to food is equitable in the community. Sustainability, which is the focus of smart buildings, is not just about corporate social responsibility. Our buildings, operations, and corporate services have made a commitment to achieve 100% renewable energy across global operations by 2040, as well as net zero greenhouse gas emissions. And from a product standpoint, for the products Intel brings to the marketplace (our microprocessors, edge silicon, and software), the goal is to increase product energy efficiency 10x from what it is today, as well as to enable our value chain to adopt these energy-efficient processes so that electronic waste doesn’t contribute to greenhouse emissions. Those are some of the things we are doing as a corporation to address sustainability goals.

Christina Cardoza: Great. Thanks for that, Sunita. And you mentioned a couple of Intel technologies in there. Graeme, I’m wondering, you talked about the value of the partnership already with Intel, but what about the Intel technology? What are you leveraging in OpenBlue, and how has that been important to the solution and to businesses?

Graeme Jarvis: First of all, before I get into the technology, I’d be remiss if I didn’t mention the value Intel brings to our relationship. It’s all about the people. Intel has a great employee base and a great culture. They’re a pleasure to work with, from their executive leaders to their field teams. So it starts with the people, and I want to mention that because it’s critical. Next would be the depth of expertise they bring to a client’s environment, especially on the IT side. This complements our world at Johnson Controls, because we’re more on the OT side, and the IT and OT worlds are converging as the connected, sustainable model we’ve been talking about becomes a business reality.

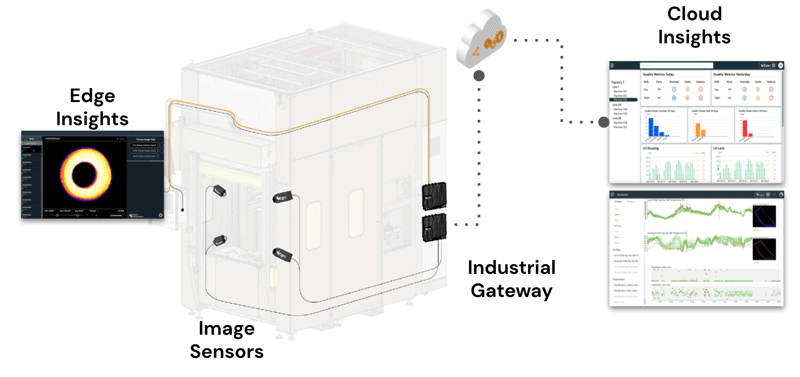

And so between the two of us we can solve for a lot of customer challenges and outcomes they’re looking to realize that neither of us could do independently. Intel’s silicon hardware and their compute, edge, and AI capabilities really help us bring relevant solutions, either from a product standpoint, because a product is embedded with Intel compute and capability, or because they enable some of the edge capability we bring to our clients’ environments through OpenBlue. I also want to mention the cyber side. Going back to IT and OT, Intel has great capability in IT cybersecurity, and we’ve got great capability on the OT cybersecurity side. When you talk to a client, they’re looking for an end-to-end solution. So that’s another area where we’re better together, and we’re better for our clients together.

Christina Cardoza: I always love that saying, “better together.” It’s a big theme here at insight.tech, especially working with partners like Intel. I think you gave our listeners, business owners, and building owners a lot to think about as they try to meet their sustainability goals. Unfortunately, we are running out of time, but before we go, I want to throw it back to each of you to quickly give any final takeaways or thoughts you want to leave our listeners with today. So, Sunita, I’ll start with you.

Sunita Shenoy: Yeah, so what I want to say is that the barrier to adopting or deploying a smart building is generally not the technology, because the technologies exist, right? The solutions exist. The barrier is people and the decision to employ smart building solutions, right? We’ve learned a lot over the last several years, since the conception of IoT and now edge computing, right? So it is very feasible to deploy. I think people’s mindset needs to shift and, as Graeme was saying, the IT and OT worlds need to collaborate, bringing the best practices of both together to solve these deployment challenges. Look at those challenges as opportunities.

Christina Cardoza: Absolutely. And, Graeme, any key thoughts or final takeaways from you?

Graeme Jarvis: Yes. Just one, a macro one. There’s a tremendous opportunity before us as we look to address the sustainability challenges we discussed on this program. It’s global in nature, and it’s going to require global leadership at all levels to be successful. It is hard to find work that is meaningful because it provides a good economic benefit while doing good for our planet, and I think this call to action around buildings is one of those opportunities. So, if anyone is looking for work, I would encourage them to take a look at the smart, sustainable building sector. It is part of the new frontier, and it requires a lot of different, complementary skill sets. And if any customers are listening to this podcast, I would encourage them to take a look at Intel’s websites for the solutions they offer, and at Johnson Controls’ websites; we would love to come and help you. So, thank you.

Christina Cardoza: Absolutely. Great final takeaway to leave us with today. And with that, I just want to thank both of you for joining the podcast. It’s been a pleasure talking to you, and thanks to our listeners for tuning in. If you like this episode, please like, subscribe, rate, review—all of the above on your favorite streaming platform. Until next time, this has been the IoT Chat.

The preceding transcript is provided to ensure accessibility and is intended to accurately capture an informal conversation. The transcript may contain improper uses of trademarked terms and as such should not be used for any other purposes. For more information, please see the Intel® trademark information.

This transcript was edited by Erin Noble, copy editor.