It’s an exciting time to be an end user. The customer experience—of retail, hospitality, banking, dining, entertainment—is evolving before our very eyes. And the past couple of years have only accelerated changes already in the works. But what about businesses that provide those customer experiences? Many of them have had to make changes on the fly to stay competitive and have been forced into digital transformations they weren’t always prepared for.

Fortunately, AI technology company meldCX aims to help its customers blend legacy systems with the latest technology, create an omnichannel experience, and get the most out of their data.

We talk with Stephen Borg, Co-Founder and CEO of meldCX, and Chris O’Malley, Director of Marketing for the Internet of Things Group at Intel®. They discuss the challenges of digital transformation, the demand for frictionless interactions, and how the power of computer vision can serve both the omnichannel experience and human engagement.

How are customer experiences evolving across different industries?

Stephen Borg: The whole COVID situation has really accelerated the curve of change. Our customers need to increase the level of service for their customers, without having as many resources—either budgetary or staff—to do it with. But when their customers venture out, they expect a higher degree of service; they expect a demonstration of cleanliness; they expect a higher degree of engagement.

So how do you do that while either reducing costs or using fewer resources? Businesses are starting to turn to technology for the elements that are not customer facing and redirecting those resources towards creating great experiences. They’re asking: “What are the opportunities to reduce friction, create automation, but increase engagement?”

How well are businesses adapting to these changes?

Chris O’Malley: All the trends and challenges that retail was facing prior to COVID still exist. Three years ago people were talking about frictionless; millennials and other digital natives are used to engaging with technology as opposed to humans. There were also inventory and supply challenges before COVID. But the level of growth towards frictionless was slow; it was nice to have this technology, but it wasn’t absolutely necessary.

What we’re finding now is that the companies that started investing in this type of technology before COVID are the ones doing really well now that it has hit. But many companies went into COVID with almost no one-to-one digital contact with their customers. Now they have this massive digital relationship with them, but if they don’t know how to deal with that data, if they don’t know how to personalize with that data, they’re really struggling.

How are tools and technologies helping customers deal with challenges?

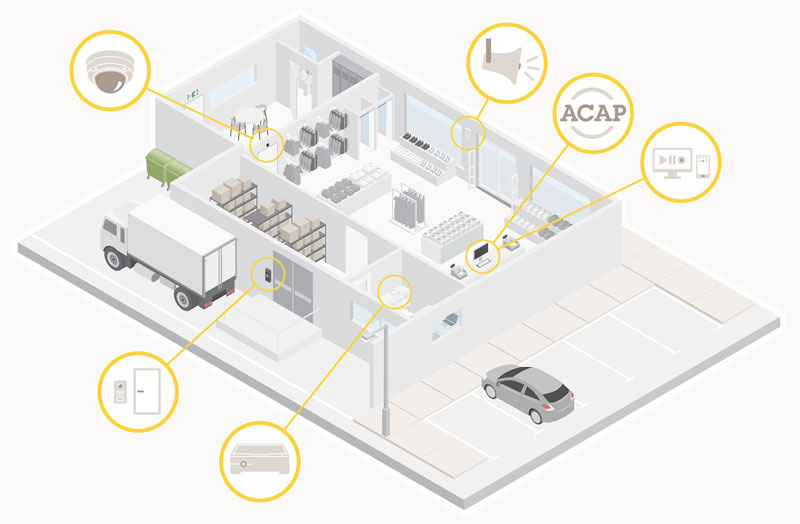

Stephen Borg: When we started out with meldCX, we wanted to create a solution out of the box, one where you could simply plug and play. No need for data scientists, no need for a massive team to stand up the most common aspects of computer vision—analytics, tracking, inventory.

Secondly, we found a lot of customers that were, say, three-quarters through a project where they had existing investment in some models. Now they can pump those models through meldCX, using OpenVINO™, and mix them with other, complementary models that we have. Or, thirdly, we can create a model for a customer that has a specific use case or a specific problem to solve.


And now we’re finding that the level of engagement is cross-business. Unlike in the past, where we might have had an IT stakeholder or a marketing stakeholder, now we have everyone at the table. We advise customers to start with computer vision, to start with out-of-the-box modules, and then go from there. We want them to understand that it’s really an amplification of their existing capability. Then our team works with them to really drill down into that problem-solving phase for future growth.

Chris O’Malley: Another big thing is right in the name, meldCX—melding the old technology with the new. Say a customer doesn’t want to do the full investment into a new camera setup right now—they might have security cameras already in place. So, meldCX can take those feeds right away, load some models on them, and get basic data right from the get-go. That way, the customer doesn’t have to do a massive investment to start getting data. And we find that once customers start to see what computer vision can provide, then they’re interested in investing further. They see the power of it.

And we’re right at the beginning at this point. The corollary to Moore’s law is that technology also becomes cheaper every single year. But when you start adding compute to everything, there’s going to be an immense amount of data. So you need technology like this to get started making those valuable, actionable insights for your company.

When we talk about a retail omnichannel experience, what does that mean?

Stephen Borg: There’s been a lot of focus on mobile and a lot of focus on web, but we haven’t seen much work on connecting mobile and web into a single, seamless experience at the in-store or physical touchpoint.

It could be connecting the computer vision to a locally occurring event. For example, if you go to a self-service device and it knows you’ve used it multiple times, it won’t go through all the instructions again. It’s little, subtle things done anonymously that create convenience and context.

How would you suggest that businesses get started on this omnichannel journey?

Chris O’Malley: In the last 10 to 15 years, online advertisement has really eaten up a lot of the market share—largely because behavior could be tracked that way. But when someone goes in-store, there’s none of that information. With the technology that meldCX is offering, that computer vision is offering, you now have that ability to figure out: Is my campaign working? Did it actually influence people? Were they engaged with it? And you can make changes. Before the advent of computer vision, that option didn’t really exist.

That’s pretty powerful. And now that information can start to be monetized. But the retailer or the business can also figure out how it might change a display, for example, or how it might change associate activities. I’m in marketing, and we always say that 50% of the money we spend is useless and 50% is valuable. We just don’t know which one is which. With the technology that meldCX has, we’re starting to be able to figure that out in-store. What’s valuable, and what’s not?

Stephen Borg: For example, we work with a retailer that has started monitoring the bay you see when you first walk into the store and monetizing it based on customers touching the products on the shelf there. So instead of vendors paying to be displayed in that front-of-store bay, they pay for every touch of an individual product located there. In effect, the retailer is monetizing that bay like a website.

What’s the best way to analyze and process data to make more-informed decisions?

Chris O’Malley: There’s a huge amount of very valuable data out there that goes unused. One reason for that is that a lot of retailers—legacy retailers in particular—have very siloed data. The POS data is in one place, the kiosk data in-store is over here, mobile data is over here, there’s online data over here. And the data is never shared between them.

When you move to an edge-based or microservices-based architecture, you can have shared data, or a data lake—that’s when you can start to make sense of all of it. But you’ve also got to make sure that the data is standardized.
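As a rough illustration of what “standardized” can mean in practice, here is a minimal Python sketch that maps records from three siloed sources into one shared schema before they are pooled. It rests on stated assumptions: the source names, their field names (`item_code`, `productId`, `units`, and so on), and the `UnifiedEvent` schema are all hypothetical, not meldCX’s or any retailer’s actual data model. The two normalization choices it shows are trimmed, uppercase SKUs and timezone-aware UTC timestamps, so that events from different channels can be joined and compared.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class UnifiedEvent:
    """Hypothetical shared schema that every channel is mapped into."""
    channel: str
    sku: str             # trimmed and uppercased
    quantity: int
    timestamp: datetime  # always timezone-aware UTC


def from_pos(row: dict) -> UnifiedEvent:
    # Hypothetical POS export: epoch seconds, "item_code", "qty" as a string
    return UnifiedEvent(
        channel="pos",
        sku=row["item_code"].strip().upper(),
        quantity=int(row["qty"]),
        timestamp=datetime.fromtimestamp(row["ts"], tz=timezone.utc),
    )


def from_kiosk(row: dict) -> UnifiedEvent:
    # Hypothetical kiosk log: ISO 8601 string with a UTC offset
    return UnifiedEvent(
        channel="kiosk",
        sku=row["productId"].strip().upper(),
        quantity=int(row["count"]),
        timestamp=datetime.fromisoformat(row["time"]).astimezone(timezone.utc),
    )


def from_online(row: dict) -> UnifiedEvent:
    # Hypothetical web order line: naive timestamp assumed to already be UTC
    return UnifiedEvent(
        channel="online",
        sku=row["sku"].strip().upper(),
        quantity=int(row["units"]),
        timestamp=datetime.fromisoformat(row["created"]).replace(tzinfo=timezone.utc),
    )


NORMALIZERS = {"pos": from_pos, "kiosk": from_kiosk, "online": from_online}


def standardize(source: str, rows: list[dict]) -> list[UnifiedEvent]:
    """Convert raw rows from one named source into the shared schema."""
    normalize = NORMALIZERS[source]
    return [normalize(row) for row in rows]
```

Once every channel emits the same shape, a cross-channel question (for example, how kiosk activity lines up with POS sales for the same SKU) becomes a simple join rather than a reconciliation project.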

The other thing is big data analytics. You might look at pieces of data and think there’s no correlation, no relation. But if you see them over and over in big data, you might figure out that actually, yes—product A does influence product B. It all comes down to making sure that data is shared by all the different apps.
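One common way to test a hunch like “product A influences product B” is association-rule lift: how much more often two items appear together than they would if they were bought independently, lift(A, B) = P(A and B) / (P(A) × P(B)). A lift well above 1 suggests a relationship worth investigating, although it only shows co-occurrence, not that A causes B. The minimal Python sketch below assumes baskets are already standardized sets of product IDs; `pair_lift` is a hypothetical helper illustrating a standard technique, not the analytics method either speaker describes.

```python
from __future__ import annotations

from collections import Counter
from itertools import combinations


def pair_lift(baskets: list[set[str]]) -> dict[tuple[str, str], float]:
    """Compute lift for every pair of items that co-occurs in at least one basket."""
    n = len(baskets)
    item_counts: Counter = Counter()
    pair_counts: Counter = Counter()
    for basket in baskets:
        item_counts.update(basket)
        # sorted() gives each unordered pair a single canonical key
        pair_counts.update(combinations(sorted(basket), 2))

    lifts: dict[tuple[str, str], float] = {}
    for (a, b), together in pair_counts.items():
        p_a = item_counts[a] / n
        p_b = item_counts[b] / n
        p_ab = together / n
        lifts[(a, b)] = p_ab / (p_a * p_b)
    return lifts
```

With baskets `[{"A", "B"}, {"A", "B"}, {"A"}, {"C"}]`, A appears in 3 of 4 baskets, B in 2, and both together in 2, so lift(A, B) = 0.5 / (0.75 × 0.5) ≈ 1.33.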

How do you team up with partners to make all this possible for businesses?

Stephen Borg: There are multiple types of data, and some of it is immediately actionable. For example, we work with a large hotel chain, and when data points to the fact that the front desk has hit a threshold and needs to be cleaned, we push that data through an intermediate alert; the hotel uses a Salesforce communication app to notify the staff. At meldCX we don’t necessarily store that data; it’s immediately actionable.
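The hotel example amounts to threshold-based alerting at the edge: count events per zone, and when a count crosses a limit, send a small, immediately actionable message instead of storing the underlying data. The Python sketch below illustrates that pattern under assumptions: `CleaningMonitor`, the threshold value, and the alert payload are hypothetical, and `notify` is a placeholder callback standing in for whatever messaging integration a site uses (the Salesforce app mentioned in the interview is not modeled here).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CleaningMonitor:
    """Hypothetical edge-side monitor: counts interactions per zone, alerts at a threshold."""

    threshold: int                  # interactions allowed before a clean is requested
    notify: Callable[[dict], None]  # placeholder for the site's messaging integration
    _counts: dict = field(default_factory=dict, init=False)

    def record_interaction(self, zone: str) -> None:
        count = self._counts.get(zone, 0) + 1
        if count >= self.threshold:
            # Only a small, anonymous event leaves the monitor: no frames, no identities.
            self.notify({"zone": zone, "event": "cleaning_required", "interactions": count})
            count = 0  # start counting again once the alert has been sent
        self._counts[zone] = count

    def mark_cleaned(self, zone: str) -> None:
        # A staff confirmation resets the zone early, even if no alert fired yet.
        self._counts[zone] = 0
```

For example, `CleaningMonitor(threshold=3, notify=alerts.append)` fed seven front-desk interactions sends two alerts and leaves one interaction counted. Resetting the counter when an alert fires keeps the monitor from re-alerting on every subsequent touch; a real deployment might instead suppress alerts until staff confirm the clean through `mark_cleaned`.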

There’s also historical data or multisource data that we’re trying to get insights out of. We work with Intel from an OpenVINO perspective to make sure our models are optimized. That means less heavy infrastructure at the edge, which significantly reduces cost, and that’s great.

We also work with partners such as Microsoft, Google, and Snowflake to provide customers the data set in the way they want to consume it. We have a suite of dashboards that can be used depending on the person’s role in retail: you sign on, which pulls your persona, and we’ll give you the data that’s relevant to your role.

Chris O’Malley: Another thing is that with a lot of activities in a retail store, that information may be wanted in absolute real time, and so it needs to happen at the edge. We mentioned earlier that compute is getting so cheap that companies are adding more and more of it. But their data is growing significantly faster than the cost of connectivity to the cloud is falling. So this type of processing has to be done at the edge. And Intel with OpenVINO is very much optimized for using edge capability to do the inference and get the real-time analytics that are needed.

Stephen Borg: We don’t send any video to the cloud. We strip out everything we need at the edge to reduce the cost, and, more importantly, for privacy reasons. That way there’s no instance of any private data going into the cloud or going through our system. It’s all stripped out by that edge device and OpenVINO.

What do the end customers think about all these changes?

Stephen Borg: If it’s all done with privacy in mind, customers respond to it quite well. They’re getting through checkout more quickly, or staff members have information that’s relevant to those customers, maybe even tailored to them. And we’re finding that if you’re providing a frictionless experience, staff members can focus beyond just the transaction—on a conversation, or on some type of real engagement. We’ve found that, particularly in some countries with strict lockdown conditions, shopping has in some cases become even more social, because when people do get out, they want to be engaged.

Any final takeaways on the omnichannel customer-experience journey?

Chris O’Malley: Customers want a frictionless experience and a personalized experience. You can have the parts of shopping that everybody still loves, but you can also bring in the benefits of the online experience by using all of these tools. There’s also the ability to replace human resources in some instances, because the worldwide labor shortage is real; restaurants can’t fill up all of their seats because they don’t have enough staff. The same thing is happening in hospitality and entertainment venues. If you can automate some of the things that were formerly done by staff, you can keep those valuable human resources for the things people really like—the interactions.

Focus your human resources and your human talent on those interactions that really drive experiences, and all the stuff in the background—all the operational aspects, all the inventory, all the insights—set those up with computer vision and automation. That’s what it’s really good at.

Related Content

To learn more about the latest innovations from meldCX, listen to our podcast on The Power of Omnichannel Experiences with meldCX and Intel®.

This article was edited by Erin Noble, copy editor.