Environmentalists everywhere have circled 2050 on their calendars. By then, scientists say, we must have halted, if not reversed, the effects of decades of greenhouse gas emissions if we hope to stabilize the Earth’s climate.

The alternative is bleak, but for these efforts to succeed, we need cooperation that scales from the smallest, most remote edges of our environment to some of the largest multinational corporations in the world.

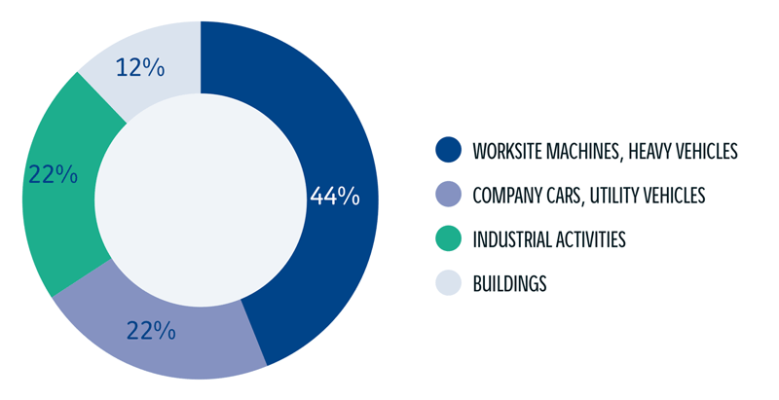

For instance, VINCI Energies (VE), a French energy infrastructure provider that develops technology solutions for building, factory, and IT customers worldwide, is working toward carbon neutrality by mid-century. The company comprises 1,800 specialized business units across 55 countries and manages about 400,000 projects each year. Needless to say, its carbon footprint is immense (Figure 1).

It aims to cut its carbon emissions 40% by 2030. To do this, it has adopted an “Avoid and Reduce” strategy based on a plan with three scopes. The first covers emissions from buildings and offices, the second covers gas-powered service vehicles, and the third, still in development, will cover long-distance travel.
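A 40% cut by 2030 is a ratio against a baseline, so interim targets follow from simple arithmetic. The sketch below assumes a hypothetical baseline year and tonnage (the article gives neither) and a straight-line reduction path; real pathways may be front- or back-loaded.

```python
def target_emissions(baseline_t: float, reduction: float = 0.40) -> float:
    """Emissions allowed in the target year after cutting `reduction` from the baseline."""
    return baseline_t * (1.0 - reduction)


def linear_pathway(baseline_t: float, baseline_year: int, target_year: int = 2030,
                   reduction: float = 0.40) -> dict[int, float]:
    """Straight-line interim targets from baseline_year to target_year (an assumption)."""
    end = target_emissions(baseline_t, reduction)
    span = target_year - baseline_year
    return {
        year: baseline_t + (end - baseline_t) * (year - baseline_year) / span
        for year in range(baseline_year, target_year + 1)
    }


if __name__ == "__main__":
    # Hypothetical: 100,000 t CO2e in an assumed 2020 baseline year.
    path = linear_pathway(100_000, 2020)
    print(path[2025], path[2030])
```

Under these assumptions the 2025 milestone is 80,000 t and the 2030 ceiling is 60,000 t; changing the baseline year or amount shifts every interim value.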

But the company has learned that setting clean-energy goals and achieving them are two very different things.

To avoid carbon-intensive activities in some places and reduce emissions in others, the company first had to be able to track and monitor the environmental impact of its operations. The sheer size of VE and the variety of its use cases made this challenging: no off-the-shelf carbon-monitoring solution offered the scalability and flexibility it required.

The capabilities VE was looking for included the ability to:

- Paint an accurate picture of CO2 emissions at the company, organization, and business unit levels

- Analyze data from third-party agencies like fleet management, garbage handling, transport, and other service providers

- Offer actionable benchmarks that allow improvements to be measured
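One way to make a benchmark actionable is to compare each business unit’s current emissions with its own baseline and flag the units falling short of a required cut. The unit names, figures, and the 20% threshold in this sketch are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UnitEmissions:
    unit: str            # hypothetical business-unit identifier
    baseline_t: float    # tonnes CO2e in the baseline period
    current_t: float     # tonnes CO2e in the current period

    @property
    def change_pct(self) -> float:
        """Percent change vs. baseline (negative means a reduction)."""
        return (self.current_t - self.baseline_t) / self.baseline_t * 100.0


def behind_target(units: list[UnitEmissions], required_cut_pct: float) -> list[str]:
    """Return units whose reduction is smaller than required_cut_pct, worst first."""
    lagging = [u for u in units if -u.change_pct < required_cut_pct]
    # Sort so the unit with the largest increase (or smallest cut) comes first.
    lagging.sort(key=lambda u: u.change_pct, reverse=True)
    return [u.unit for u in lagging]


if __name__ == "__main__":
    units = [
        UnitEmissions("BU-North", 1200.0, 900.0),   # 25% reduction
        UnitEmissions("BU-South", 800.0, 760.0),    # 5% reduction
        UnitEmissions("BU-West", 500.0, 520.0),     # 4% increase
    ]
    print(behind_target(units, required_cut_pct=20.0))
```

Ranking the lagging units, rather than just listing them, gives managers an order in which to intervene.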

Avoid and Reduce Sustainability Initiatives in Action

Fortunately, the company was able to find a path to build its ideal solution internally. VINCI Energies leveraged Axians IoT Operations, a VE subsidiary that designs industry PaaS offerings around its microServiceBus.com device management solution.

microServiceBus.com is a protocol-agnostic device management solution that runs small-footprint software agents on IoT gateways. These agents carry sensor data from edge nodes to and from the cloud, using protocols such as Modbus, mBus, OPC-UA, LoRa, Bluetooth, and IEC 61850, among others.
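The idea of a protocol-agnostic agent can be illustrated with an adapter pattern: each protocol-specific reader converts its native reading into one common message envelope before it is sent upstream. This is a hypothetical sketch; the class names, message fields, and simulated readers are not the microServiceBus.com API, and real Modbus or mBus access would require a protocol library.

```python
import json
import time
from abc import ABC, abstractmethod


class ProtocolReader(ABC):
    """Hypothetical base class: one subclass per field protocol."""

    protocol: str = "unknown"

    @abstractmethod
    def read(self) -> dict:
        """Return a raw reading in the protocol's own shape."""

    @abstractmethod
    def normalize(self, raw: dict) -> dict:
        """Map a raw reading to {"metric", "value", "unit"}."""


class SimulatedModbusMeter(ProtocolReader):
    protocol = "modbus"

    def read(self) -> dict:
        # Stand-in for a holding-register read; energy assumed to be stored in 0.1 kWh units.
        return {"register": 40001, "raw": 12345}

    def normalize(self, raw: dict) -> dict:
        return {"metric": "energy", "value": raw["raw"] / 10, "unit": "kWh"}


class SimulatedMBusWaterMeter(ProtocolReader):
    protocol = "mbus"

    def read(self) -> dict:
        # Stand-in for a water-meter telegram already decoded to litres.
        return {"vif": "volume", "litres": 8420}

    def normalize(self, raw: dict) -> dict:
        return {"metric": "water", "value": raw["litres"] / 1000, "unit": "m3"}


def to_envelope(device_id: str, reader: ProtocolReader) -> str:
    """Build the protocol-independent JSON message an agent would forward to the cloud."""
    payload = reader.normalize(reader.read())
    return json.dumps({
        "device": device_id,
        "protocol": reader.protocol,
        "ts": int(time.time()),
        **payload,
    })


if __name__ == "__main__":
    for dev, reader in [("gw1/meter-a", SimulatedModbusMeter()),
                        ("gw1/water-1", SimulatedMBusWaterMeter())]:
        print(to_envelope(dev, reader))
```

Because the cloud side only ever sees the common envelope, adding a new field protocol means writing one more reader class rather than changing the upstream pipeline.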

With microServiceBus.com as a foundation, Axians IoT Operations built the GreenEdge PaaS solution, a real-time environmental footprint reporting system. VE Sweden currently uses GreenEdge to automatically update the three scopes with real-world data from IoT sensors and other business systems. The solution is built on Microsoft Azure IoT Hub but integrates with other major cloud platforms such as AWS, Google Cloud, and IBM Watson.
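Updating the scopes from sensor and business-system data essentially means multiplying activity data (kWh, litres of fuel, kilometres travelled) by emission factors and summing per scope. The factors below are placeholders for illustration only, not official values; a real deployment would draw them from a recognized source for each country and fuel. The scope labels follow the article’s three-scope plan (buildings, vehicles, long-distance travel), and the record format is an assumption.

```python
from collections import defaultdict

# Placeholder emission factors in kg CO2e per unit of activity (NOT official values).
EMISSION_FACTORS = {
    ("electricity", "kWh"): 0.05,
    ("diesel", "litre"): 2.7,
    ("flight", "km"): 0.15,
}

# Mapping from activity type to the article's three scopes.
SCOPE_OF = {
    "electricity": "buildings",
    "diesel": "vehicles",
    "flight": "travel",
}


def co2e_by_scope(records: list[tuple[str, float, str]]) -> dict[str, float]:
    """Sum kg CO2e per scope from (activity, quantity, unit) records."""
    totals: dict[str, float] = defaultdict(float)
    for activity, quantity, unit in records:
        factor = EMISSION_FACTORS.get((activity, unit))
        if factor is None:
            # Refuse to guess: an unknown activity/unit pair would silently skew totals.
            raise ValueError(f"no emission factor for {activity!r} in {unit!r}")
        totals[SCOPE_OF[activity]] += quantity * factor
    return dict(totals)


if __name__ == "__main__":
    readings = [
        ("electricity", 12_000.0, "kWh"),  # e.g. from an office energy meter
        ("diesel", 350.0, "litre"),        # e.g. from a fleet-management export
        ("flight", 2_400.0, "km"),         # e.g. from a travel-booking system
    ]
    print(co2e_by_scope(readings))
```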

“The main challenge most companies have is automated data ingestion that provides accurate, up-to-date information when visualizing your own carbon emissions,” says Natali Velozo, Head of Operations at Axians IoT. “For example, VE’s management structure, with different regions and business units and divisions, is a complex structure, and being able to see different emissions on different levels depending on your needs was quite a challenge.”

The GreenEdge platform not only can eliminate manual data imports but also gives stakeholders the opportunity to visualize and respond to carbon emissions indicators in real time.

“We developed GreenEdge to be able to follow different customers’ management structures and aggregate data depending on your role and what you need to see,” Velozo explains.
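Following a customer’s management structure amounts to rolling figures up a tree (business unit, then region, then company) and returning only the part of the tree a given role should see. The hierarchy, names, figures, and the simple role model below are hypothetical; they are not GreenEdge’s data model.

```python
from dataclasses import dataclass, field


@dataclass
class OrgNode:
    name: str
    own_t: float = 0.0                                      # emissions reported directly at this node
    children: list["OrgNode"] = field(default_factory=list)

    def total(self) -> float:
        """Emissions for this node plus everything beneath it."""
        return self.own_t + sum(c.total() for c in self.children)

    def find(self, name: str) -> "OrgNode | None":
        """Depth-first search for a node by name."""
        if self.name == name:
            return self
        for c in self.children:
            hit = c.find(name)
            if hit is not None:
                return hit
        return None


def view_for(root: OrgNode, scope_node: str) -> dict[str, float]:
    """Totals for the node a role is scoped to and its direct children (hypothetical role model)."""
    node = root.find(scope_node)
    if node is None:
        raise KeyError(scope_node)
    return {node.name: node.total(), **{c.name: c.total() for c in node.children}}


if __name__ == "__main__":
    company = OrgNode("Company", children=[
        OrgNode("Region-East", children=[OrgNode("BU-1", 120.0), OrgNode("BU-2", 80.0)]),
        OrgNode("Region-West", children=[OrgNode("BU-3", 150.0)]),
    ])
    print(view_for(company, "Region-East"))   # a regional manager's view
    print(company.total())                     # the company-wide figure
```

Computing totals recursively keeps each level consistent with the levels beneath it, so a regional figure always equals the sum of its business units plus anything reported at the regional level itself.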

Carbon Emissions Data at the Green Edge

However flexible and scalable the GreenEdge platform is, carbon emissions data doesn’t originate in the cloud. It is created at the edge.

microServiceBus.com agents run on hardware targets that host Ubuntu and Yocto Project Linux distributions, Arm mbed, Docker, or Node.js runtimes. In the VE GreenEdge deployment in Sweden, the agents reside on hundreds of Intel® Next Unit of Computing (Intel® NUC) for Industrial mini-PCs. The rugged edge gateways provide a cost-effective solution for IoT applications that demand 24/7 operation, and natively support Intel® vPro technology for remote monitoring and management.

Each gateway is powered by an Intel® Celeron®, Pentium®, or Core™ processor, which delivers the performance scalability needed at the edge.

“Edge gateways are designed to have delegated workloads that otherwise would have been processed in the cloud. The reasons for that are because of large volumes of data or requirements such as low latency. Therefore, our preferred gateway is the industrial Intel® NUC, and that’s something we can use for video processing and machine learning,” Velozo explains.
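Velozo’s point about delegating workloads to the gateway because of data volume can be made concrete with a simple edge-side reduction: instead of streaming every raw sample, the gateway summarizes each time window and forwards only the summary. The one-minute window and the summary fields here are illustrative assumptions, not a description of how GreenEdge is known to work.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass(frozen=True)
class WindowSummary:
    window_start: int   # epoch seconds, aligned to the window size
    count: int
    mean: float
    minimum: float
    maximum: float


def summarize(samples: list[tuple[int, float]], window_s: int = 60) -> list[WindowSummary]:
    """Group (timestamp, value) samples into fixed windows and summarize each one."""
    buckets: dict[int, list[float]] = {}
    for ts, value in samples:
        start = ts - ts % window_s          # align the timestamp to its window
        buckets.setdefault(start, []).append(value)
    return [
        WindowSummary(start, len(vals), fmean(vals), min(vals), max(vals))
        for start, vals in sorted(buckets.items())
    ]


if __name__ == "__main__":
    # Hypothetical 1 Hz power readings (kW) for three minutes: 180 samples in, 3 summaries out.
    raw = [(t, 10.0 + (t % 60) / 60) for t in range(0, 180)]
    for s in summarize(raw):
        print(s)
```

Keeping the minimum and maximum alongside the mean preserves short spikes that a mean alone would hide, while still cutting the upload volume by the window size.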

Bringing Sustainability Online for Everyone

VE and Axians IoT recognize that environmental impact isn’t limited to air pollution. They have therefore built provisions into GreenEdge to support water conservation, and they have set a further goal of recycling 80% of company waste, including treating and recycling 100% of hazardous waste.
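The waste goals translate into two ratios a dashboard could track: the share of all waste recycled (target 80%) and the share of hazardous waste treated and recycled (target 100%). The record format and figures in this sketch are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WasteRecord:
    kg: float
    hazardous: bool
    recycled: bool   # for hazardous waste, True means treated and recycled


def waste_goal_status(records: list[WasteRecord],
                      overall_target: float = 0.80,
                      hazardous_target: float = 1.00) -> dict:
    """Compute recycling rates by weight and compare them with the two targets."""
    total = sum(r.kg for r in records)
    recycled = sum(r.kg for r in records if r.recycled)
    haz_total = sum(r.kg for r in records if r.hazardous)
    haz_recycled = sum(r.kg for r in records if r.hazardous and r.recycled)
    # No waste recorded yields a 0.0 rate rather than a division error.
    overall_rate = recycled / total if total else 0.0
    # With no hazardous waste, the 100% goal is trivially met.
    haz_rate = haz_recycled / haz_total if haz_total else 1.0
    return {
        "overall_rate": overall_rate,
        "overall_met": overall_rate >= overall_target,
        "hazardous_rate": haz_rate,
        "hazardous_met": haz_rate >= hazardous_target,
    }


if __name__ == "__main__":
    month = [
        WasteRecord(700.0, hazardous=False, recycled=True),
        WasteRecord(200.0, hazardous=False, recycled=False),
        WasteRecord(100.0, hazardous=True, recycled=True),
    ]
    print(waste_goal_status(month))
```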

But the biggest impact of VE’s clean-energy efforts likely won’t come from the company at all. As a utility infrastructure provider, the organization is at the core of energy decisions for a customer base it estimates has a carbon footprint 25x greater than its own.

That is humbling, and without action, the potential consequences are terrifying. But more than anything, it represents a vast opportunity to bring sustainability monitoring online and clean up life at the edge. And everywhere else, for that matter.

This article was edited by Christina Cardoza, Associate Content Director for insight.tech.