As more cities pursue climate action plans, a new vision has captured the attention of building owners, designers, and operators: a smart building that uses energy so efficiently that its carbon footprint isn’t just reduced but eliminated. Toward that end, companies like Amazon and Google have already set aggressive Net Zero goals, aiming to transform their buildings into eco-friendly machines that don’t consume more electricity than they produce.

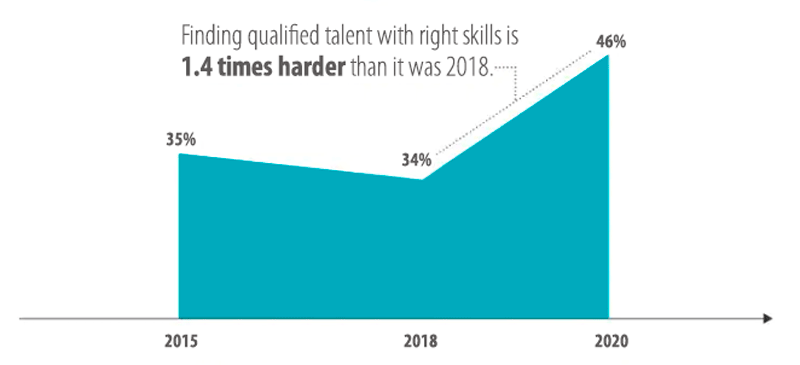

Businesses are equally focused on improving the environment inside their buildings because they know the productivity of their biggest asset, the workforce, depends on it. After all, employees do their best work when they’re comfortable and safe in a healthy environment.

Until recently, an energy-efficient building that also met the needs of its occupants was very difficult to achieve. Just think of the cost of powering and cooling an entire floor for a few essential employees working on a weekend.

But new smart-building technologies are changing the game. Now companies don’t have to choose among a reduced carbon footprint, a safe and comfortable occupant experience, and cost savings. Advanced data analytics and AI-powered insights help them achieve all three.

Net Zero Optimization Depends on Cohesive Data

What is the key to transforming a building from a passive structure into an invaluable business tool? A “single pane of glass” that can view, command, and optimize performance across multiple building systems. That is, operators need real-time insights into what’s being consumed, at what rate, and why, so malfunctions and inefficiencies can be identified and addressed right away.

This idea applies not only to commodities like energy but also to space allocation and human capital.

“This holistic picture is really the first step toward optimizing your facilities—whether for Net Zero, employee productivity, or both,” says Terrill Laughton, Vice President of Enterprise Optimization and Connected Offerings at smart-building solutions provider Johnson Controls, Inc. (JCI).
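Knowing what’s being consumed, at what rate, and why starts with spotting readings that break from normal patterns. The article doesn’t describe how OpenBlue does this, so the sketch below swaps in a deliberately simple technique: a one-sided z-score test that flags an interval’s consumption when it sits well above a meter’s recent history. The function name, the three-standard-deviation threshold, and the sample kWh values are illustrative assumptions.

```python
from statistics import mean, pstdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it exceeds the mean of `history` by more than
    `threshold` population standard deviations (a one-sided z-score test).

    Hypothetical helper for illustration; not an OpenBlue API.
    """
    if len(history) < 2:
        # Not enough history to estimate normal behavior.
        return False
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Perfectly flat history: any increase counts as unusual.
        return latest > mu
    return (latest - mu) / sigma > threshold


# Hourly kWh readings for one hypothetical floor meter.
recent_hours = [10.0, 11.0, 9.0, 10.0, 10.0]
print(is_anomalous(recent_hours, 10.5))  # False: within the normal range
print(is_anomalous(recent_hours, 14.0))  # True: worth investigating
```

A z-score against recent readings is easy to explain but ignores time-of-day, weather, and occupancy effects; a production system would more likely compare each reading against a baseline model that accounts for them.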

The information this kind of optimization requires is hard to get in usable form in a traditional building, where systems from different eras and providers typically operate independently. Maintaining these isolated systems and mining their data is expensive and difficult. Even then, turning that data into tangible, actionable insights takes a highly trained and experienced building professional.

But when systems are connected and data is translated to a common schema, the information can be shared and combined for new and powerful use cases. For example, an operations manager could quickly see the organization’s total energy consumption, whether across one building, 100, or even 1,000. JCI’s OpenBlue Platform and suite of applications were designed with these goals in mind.
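Translating data to a common schema can be as simple as a lookup table that maps each vendor’s native point name onto a shared field and unit. The sketch below assumes two hypothetical vendors (one reporting kWh, one Wh) with invented point names; it normalizes raw readings, skips anything unmapped, and totals energy per building and for the whole portfolio. It illustrates the idea only and is not how OpenBlue is implemented.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical mapping: (vendor, native point name) -> (common field, scale factor).
# The scale factor converts the vendor's unit into the common unit (kWh).
SCHEMA_MAP = {
    ("vendor_a", "ElecMtr.kWh"): ("energy_kwh", 1.0),
    ("vendor_b", "EM_TOTAL_WH"): ("energy_kwh", 0.001),  # Wh -> kWh
}


def normalize(record: dict) -> Optional[dict]:
    """Translate one raw reading into the common schema, or None if unmapped."""
    key = (record["vendor"], record["point"])
    if key not in SCHEMA_MAP:
        return None  # Unknown point: skip it rather than guess its meaning.
    field, scale = SCHEMA_MAP[key]
    return {"building": record["building"], "field": field, "value": record["value"] * scale}


def energy_totals(records: list[dict]) -> tuple[dict, float]:
    """Sum normalized energy per building and across the whole portfolio."""
    per_building: dict = defaultdict(float)
    for raw in records:
        rec = normalize(raw)
        if rec is not None and rec["field"] == "energy_kwh":
            per_building[rec["building"]] += rec["value"]
    return dict(per_building), sum(per_building.values())


readings = [
    {"building": "HQ", "vendor": "vendor_a", "point": "ElecMtr.kWh", "value": 120.0},
    {"building": "Plant-2", "vendor": "vendor_b", "point": "EM_TOTAL_WH", "value": 80000.0},
    {"building": "Plant-2", "vendor": "vendor_b", "point": "ZoneTemp", "value": 21.5},
]
per_building, portfolio_kwh = energy_totals(readings)
print(per_building, portfolio_kwh)
```

The zone-temperature reading is ignored because it isn’t mapped to an energy field, which is the behavior you want when one feed mixes many kinds of points. Adding the hundredth or thousandth building then means adding mapping entries, not new aggregation logic.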

“HVAC, for example, can be responsible for up to 50% of the overall energy consumption in the commercial-building space,” explains Laughton.


The OpenBlue Enterprise Manager can help customers pursuing green and cost-savings goals keep the equipment in the central plant, which supplies a building’s heating and cooling, running dependably, and spot problems early so they can take pre-emptive action if necessary. “Just running your central plant in a smarter way can cut HVAC energy consumption by 15% to 25% and decrease the overall building load up to 10%,” Laughton adds.
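Laughton’s figures fit together with simple arithmetic: if HVAC makes up a share s of a building’s energy use and smarter plant operation cuts HVAC energy by a fraction r, the whole building saves roughly s × r, assuming every other load stays the same. The helper below and its sample numbers (a hypothetical building using 1,000,000 kWh a year, with HVAC at 40% of that) are illustrative assumptions, not JCI figures.

```python
def estimate_savings(annual_kwh: float, hvac_share: float, hvac_cut: float) -> tuple[float, float]:
    """Back-of-envelope savings, assuming only HVAC energy changes.

    annual_kwh: total building energy use per year
    hvac_share: fraction of that energy used by HVAC (0 to 1)
    hvac_cut:   fractional reduction in HVAC energy (0 to 1)
    Returns (kWh saved per year, fraction of whole-building energy saved).
    """
    for name, value in (("hvac_share", hvac_share), ("hvac_cut", hvac_cut)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    saved_kwh = annual_kwh * hvac_share * hvac_cut
    return saved_kwh, hvac_share * hvac_cut


# Hypothetical building: 1,000,000 kWh/year, HVAC at 40% of the total.
for cut in (0.15, 0.25):  # the 15% to 25% HVAC range Laughton quotes
    saved, fraction = estimate_savings(1_000_000, 0.40, cut)
    print(f"HVAC cut {cut:.0%}: about {saved:,.0f} kWh/year, {fraction:.1%} of the building")
```

With HVAC at 40% of the load, a 15% to 25% HVAC cut works out to about 6% to 10% of total building energy, consistent with the “up to 10%” figure; a building where HVAC sits closer to the 50% ceiling would see proportionally more.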

The Enterprise Manager works in part by aggregating data from multiple OpenBlue apps such as Location Manager and Companion. Both apps have real-time occupancy capability, which lets building managers power only the spaces being used. And Companion helps occupants, too, by providing productivity-enhancing tools like wayfinding and personalized temperature control.
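Powering only the spaces being used comes down to a rule that turns occupancy data into equipment commands. In the weekend scenario described earlier, only the zones where people are actually working would get full lighting and comfort setpoints. The zone names, command fields, and setpoint values below are hypothetical, chosen only to illustrate the rule; they are not Location Manager or Companion APIs.

```python
from dataclasses import dataclass

# Hypothetical setpoints; real values depend on the building, climate, and codes.
OCCUPIED_COOLING_SETPOINT_C = 22.0
UNOCCUPIED_COOLING_SETPOINT_C = 27.0  # relaxed "setback" setpoint for empty zones


@dataclass
class Zone:
    name: str
    occupied: bool  # e.g. derived from real-time occupancy sensing


def plan_zone_commands(zones: list[Zone]) -> dict[str, dict]:
    """Map each zone to lighting and cooling commands based on occupancy."""
    commands = {}
    for zone in zones:
        if zone.occupied:
            commands[zone.name] = {"lights": "on", "cooling_setpoint_c": OCCUPIED_COOLING_SETPOINT_C}
        else:
            commands[zone.name] = {"lights": "off", "cooling_setpoint_c": UNOCCUPIED_COOLING_SETPOINT_C}
    return commands


# A weekend: one small team on the east side, the rest of the floor empty.
floor = [Zone("4F-East", occupied=True), Zone("4F-West", occupied=False), Zone("4F-Core", occupied=False)]
for name, cmd in plan_zone_commands(floor).items():
    print(name, cmd)
```

Setting cooling back rather than shutting it off in empty zones is a common compromise: it saves energy while keeping those spaces from drifting so far that they take a long time to recover when people arrive.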

Fully Integrated, AI-Based Security Systems

This kind of integration is the real value behind OpenBlue. For example, safety and security systems were once completely separate from other systems. But with the advent of IoT technology and AI, they’ve become another important source of building information, while serving their core purpose.

“With all these offerings on a common platform, security information can be used to improve building operations. And likewise, data from other systems can be leveraged to achieve higher levels of security,” says Sara Gardner, Global Head of Strategy and Marketing, Security, at JCI.

Modern security systems look very different from earlier generations, and of course they do much more. Touchless access controls that use mobile credentials or AI-based video analytics make for a hygienic and frictionless authentication process with multiple benefits.

People can move more quickly and safely through a facility when they don’t have to fumble with access cards or wait in long lines. And information about their presence can be shared with other smart systems, such as lighting or elevators, to improve space utilization, energy management, and the occupant experience.
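Sharing presence information with lighting or elevators is a natural fit for publish/subscribe messaging: the access-control system announces an event once, and any interested system reacts to it. The in-process bus, topic name, and event fields below are hypothetical and stand in for whatever messaging layer a real platform uses.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Deliver the event to every handler registered for this topic, in order.
        for handler in self._subscribers[topic]:
            handler(event)


actions: list[str] = []
bus = EventBus()

# Lighting and elevators react to the same touchless-entry event.
bus.subscribe("access.granted", lambda e: actions.append(f"lighting: on for floor {e['floor']}"))
bus.subscribe("access.granted", lambda e: actions.append(f"elevator: dispatch car for floor {e['floor']}"))

# The event carries only what other systems need (entry method and floor), not identity.
bus.publish("access.granted", {"method": "mobile_credential", "floor": 7})
print(actions)
```

Because the access system doesn’t need to know who is listening, new consumers, such as a space-utilization report, can subscribe later without any change to the security side.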

Smart Data Analytics Drive Innovation

OpenBlue is delivered through an as-a-service model with standardized capabilities and applications. Clients can build and develop their own solutions on the platform, and applications are highly configurable to cover a wide range of use cases. Customers can combine the smart-building applications and functionality that meet their immediate needs and add capabilities over time as their organizations evolve.

Scaling is easy because all systems are connected via a multilayer, infrastructure-agnostic platform. OpenBlue Bridge, the ingestion layer, collects data from different building systems, made possible in large part by Intel® processor-based platforms at the edge.

Once the data is collected, OpenBlue Cloud applies a schema that captures the relationships between different pieces of information. For example, there’s a schema to process how a building automation data point might fit with a security data point, explains Laughton: “And that’s when you can leverage the data and start to do something really innovative with it, Net Zero or otherwise.”
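One common way to record relationships between data points is as (subject, predicate, object) triples, loosely in the spirit of open building ontologies such as Brick Schema. The article doesn’t say which schema OpenBlue Cloud uses, so the device names and simplified relationship labels below are hypothetical. Given an access reader, the sketch finds the space it sits in, the HVAC equipment that serves that space, and that equipment’s data points: the kind of building-automation-to-security link Laughton describes.

```python
# Hypothetical relationship triples: (subject, predicate, object).
TRIPLES = [
    ("AHU-3", "feeds", "Floor-2"),
    ("AHU-3.SupplyAirTemp", "is_point_of", "AHU-3"),
    ("AHU-3.FanStatus", "is_point_of", "AHU-3"),
    ("Reader-2A", "located_in", "Floor-2"),
]


def objects(subject: str, predicate: str) -> list[str]:
    """All objects linked from `subject` by `predicate`."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def subjects(predicate: str, obj: str) -> list[str]:
    """All subjects linked to `obj` by `predicate`."""
    return [s for s, p, o in TRIPLES if p == predicate and o == obj]


def hvac_context_for_reader(reader: str) -> dict[str, list[str]]:
    """For a security device, find the HVAC equipment serving its space and that equipment's points."""
    context: dict[str, list[str]] = {}
    for space in objects(reader, "located_in"):
        for equipment in subjects("feeds", space):
            context[equipment] = subjects("is_point_of", equipment)
    return context


# An entry at Reader-2A can now be linked to the air handler serving that floor.
print(hvac_context_for_reader("Reader-2A"))
```

A linear scan over a short list is fine for a sketch; at portfolio scale the same queries would normally run against an indexed graph store.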

But a single Net Zero building or company is just the beginning. “Just imagine if every building across the United States and the world did the same,” says Laughton. “That would be a huge reduction in carbon emissions, and cost-effective, too, since companies wouldn’t have to rely on electricity purchased from the grid.”

The smart office building, warehouse, or hospital of the future is one that improves business while improving lives. By harnessing the power of AI and advanced data analytics, JCI is building it today.