
The Intel Atom® x6000E series and 11th Gen Intel® Core™ processors—formerly known as Elkhart Lake and Tiger Lake, respectively—are packed with major new features for edge applications. From completely reworked I/O to massively upgraded graphics engines, there is something for nearly every embedded and IoT application.

Join us as we talk to Christian Eder, Director of Marketing at Congatec, about the best ways to deploy these new capabilities. We discuss:

- Why Intel added an ARM processor to its CPUs

- How the new chips enable Time Coordinated Computing (TCC) across distributed systems

- How the upgraded GPUs can power AI applications

Transcript

Christian: We’re absolutely surprised about the performance. This is a single chip system on chip. And it does provide, you really see, at least from the first feeling here, it does provide the performance of the two-chip high power versions from the past year.

Kenton: That was Christian Eder, Director of Marketing at Congatec. And I’m Kenton Williston, Editor-in-Chief of insight.tech. Today we are taking a close look at the latest Intel Atom and Intel Core processors, formerly known as Elkhart Lake and Tiger Lake. There is so, so much to talk about with these new chips, so let’s get right into it.

Kenton: So Christian, welcome to the show. Can you tell me a little bit about who you are and what you do?

Christian: Yeah. My name is Christian Eder, as you mentioned. Thank you for giving me the chance to talk to you here on a podcast; it’s my first experience. So I’m the Director of Marketing here for Congatec, a company which is dedicated to embedded computer technologies, mainly computer modules. I’m also quite active in different industry standards groups to standardize new form factors, such as COM Express and now COM-HPC, and also SMARC. So I’m quite active in this embedded scene.

Kenton: Great. That’s great. I’m going to want to get into a little bit of conversation about some of those form factors here in a moment. But first, I want to get from your perspective, what this whole podcast really is about, which is the new Elkhart Lake series of processors. From your point of view, what’s so exciting about these new processors? What makes them different from what we’ve seen before?

Christian: Yeah, of course, those processors are a perfect fit for the computer modules, and also for the single-board computers we make. With this new x6000E series of Intel Atom processors, of course, with the smaller structures of 10 nanometers, we’ve got quite an increase in compute density. And the power consumption is still quite low; it’s always shrinking. So we get more performance at the same power envelope, which is great. Six to 12 watts or so is very good for us. We have four CPU cores, which is good for running things in parallel, multithreading things. And especially the graphics, with up to 32 GPU cores. So it’s going to be a significant help also towards AI stuff, because GPUs can be used not just for graphics. And of course, a lot of the I/Os got quite fast. It’s the first time we have PCI Express in generation three. There is also the next generation, or generation two, of USB 3.1, and with UFS 2.0 we have faster flash technology on our modules.

Yup. I think that’s quite a lot, not to forget, of course, the fast memory. I like this feature of in-band ECC. In the past, we had different modules with ECC RAM or without ECC RAM. Now you can choose in-band ECC if you want to utilize it. Of course, it costs a little memory space, but you get more security, and we don’t need different versions; both are possible. And for industrial use, maybe the biggest step of all is the real-time capabilities. So it’s Time Coordinated Computing: TCC is implemented in the CPUs, which is really ideal for robotics, industrial motion control hardware, whatnot. And we also utilize the i225 Ethernet controller, which provides TSN, Time-Sensitive Networking. So real-time not just in computing, but also real-time in communication.

And all of this together, of course, is a great platform when it comes to real-time operating systems. And it can even be used for, how do we call it, hardware consolidation. So with the four cores, we can install multiple operating systems, even real-time operating systems, and run those in parallel. We use the real-time hypervisor from Real Time Systems to do this. So we bring multiple platforms together on a small, low-power Atom platform. All of this becomes possible.

Kenton: It’s quite a long list of things. I think Intel is appropriately positioning this as one of its first really, truly embedded-oriented chips, versus what we’ve seen in the past: things that are a really good fit for embedded and IoT kinds of systems, but have been fundamentally derived from a PC-oriented platform. I mean, looking at the list of features on this thing, this is clearly something meant for IoT applications. Would you agree with that? This is really a pretty different approach from Intel in terms of how custom-tailored this is for embedded?

Christian: Yeah, absolutely. You feel it in each and every little feature; let’s say it was thought out with industrial use in mind. And the whole feature set is really perfect for industrial users. And you’re right, I mentioned a lot of features. What always comes on top, when you think about industrial, is the extended temperature ranges. Industrial use is not office use, where if the office is a little warmer or a little colder, I feel uncomfortable. If you’re out on the street or in a factory or whatnot, you have a really much tougher environment. And you see this just from the spec: the use cases and the temperature ranges are for industrial use, for 24/7 operation. So that’s the big difference, even if you don’t see it at first view, if you just look at the features. Let’s say the industrial use conditions are challenging, and this is clearly addressed by this new platform.

Kenton: Yeah, absolutely. And some of the other features you mentioned really stand out to me. One of them is all of the capabilities around real-time, and in particular around real-time communications. This is something I think we’re seeing a lot of interest in at the moment. Of course, in general, one of the critical elements of an industrial IoT type of application is the communications element. That’s been true for some time. But now that whole concept is really evolving, I think, and we’re seeing a lot of interesting things like what you mentioned, TSN, and not even necessarily over existing networks, but over new networks such as, for example, private 5G networks within the factory. So how do you see that feature, or feature set, I should say, of the real-time communications being utilized?

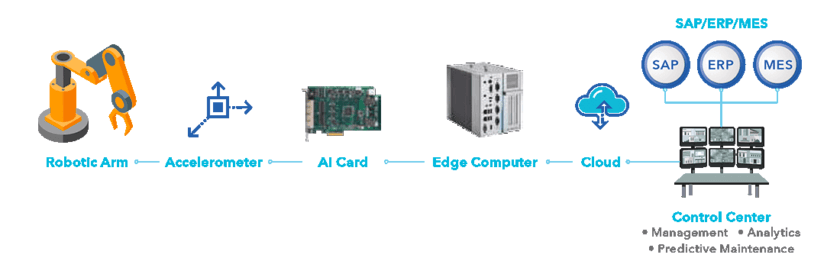

Christian: Yep. We have tons of applications when it comes to motion control and robot control; that’s always real-time critical. But it’s not limited to these use cases. Also, if you go into the test and measurement area, you have to capture the data when it occurs, and there is no second chance to do it. If you miss the sample, it’s lost. And that’s critical for a lot of medical applications as well. In the past, there was a lot of dedicated hardware around to provide, let’s say, real-time capabilities. Now it’s all built in and you can do quite a lot. We see this also with everything that is robotic. We can bring things together.

I mentioned before this real-time hypervisor, where we can bring multiple operating systems together. So we can have the real-time tasks just installed on, let’s say, a single core, which takes care of, let’s say, the motions of a robot. But a robot, of course, nowadays also needs to have some eyes, let’s say, to look around: we have cameras attached to do some AI analytics on the pictures it’s capturing. And this can be installed in parallel on the other cores. Of course, we’re still talking about an Atom, so don’t expect tremendous frame rates. But there are so many smaller applications where the Atom performance, which did grow quite a lot, I have to admit, is more than enough.

Kenton: Yeah. Can you give me an example of that kind of application?

Christian: Yeah. I’d say if you go into face recognition, for example. We have a sample here running a real-time pendulum, let’s say keeping the balance, and at the same time it does face recognition. Running it on, let’s say, FPGA-accelerated systems, we have about 300 face recognitions per second. But if you only want to recognize, let’s say, whether a user is in front of me, or whether this is the right user in front of me, just as a terminal operation, you don’t need 300 frames a second. Three or five frames a second will do the job easily. And that’s the type of application. We don’t analyze, let’s say, a whole stadium of people with an Atom; that needs different performance levels. But this stuff is possible on the smaller scale, in small applications or at the point of business, let’s say, which usually come in much larger quantities than the really high-end applications.

Kenton: That’s a great example, and just to explain to our listeners a little bit about this pendulum demo: this is something that I have seen, and I think it is a really great illustration of the real-time capabilities. I’ve seen it in the context of older platforms. The idea here is you’ve got an inverted pendulum. In other words, the axis of rotation is below the weight, which is extended upwards on an arm. And you’ve even shown me in person a demo of this thing where you can knock the pendulum around and the system will restore it to its upright state. And you can do other things in the meantime beyond balancing the pendulum, really showcasing the ability to do multiple real-time workloads in parallel.

And what I’m wondering is, you mentioned having the four cores: how do the capabilities of the current processor, in terms of its real-time capacity and just generally its performance, compare to prior generations? Is this an evolution or more of a large step up?

Christian: Yeah. Let’s say it’s in between; it is a performance gain of about 50% plus or so, and of course you can clearly feel it. But maybe to come back to this little demo you mentioned before. This pendulum needs to be controlled, and the computer has to react, let’s say, each millisecond or even more often; otherwise, it will lose its stability and fall down. If you skip some samples, it will fall down. That’s the thing. This was installed on just one core. Then we used another two cores to do the camera face recognition.

Here, we use the Intel OpenVINO platform, which is great. You can even utilize the GPU to accelerate the recognition algorithms. And so there was one more core left, and this core was the connectivity core. It drives the connection to the cloud and also acts as a firewall to keep communication on a secure level. So I believe this is a quite typical example of what you can do with these four cores: use one for secure communication, use another one for your real-time task, and then you still have two more left for your user interface or for your extra computing on top.
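The pendulum behavior Christian describes can be illustrated with a small simulation. This is a minimal sketch and not Congatec’s demo code: the linearized pendulum model, the arm length, the PD gains, and the function name `simulate` are all assumptions chosen for illustration. It compares a controller that reacts every millisecond with one that only reacts every 200 milliseconds.

```python
import math

G = 9.81              # gravity, m/s^2
ARM_LENGTH = 0.3      # assumed pendulum arm length, m
KP, KD = 100.0, 20.0  # assumed PD controller gains (hypothetical values)
DT = 0.001            # physics time step: 1 ms


def simulate(control_period_ms, duration_s=2.0, theta0=0.05):
    """Simulate a linearized inverted pendulum under PD control.

    The controller only recomputes its output every `control_period_ms`
    milliseconds and holds the last value in between.
    Returns (fell_over, final_angle_rad).
    """
    theta, omega, u = theta0, 0.0, 0.0
    for step in range(round(duration_s / DT)):
        if step % control_period_ms == 0:
            # Controller samples the state and updates its command.
            u = -KP * theta - KD * omega
        # Linearized dynamics: gravity pushes the arm away from upright.
        alpha = (G / ARM_LENGTH) * theta + u
        omega += alpha * DT
        theta += omega * DT
        if abs(theta) > math.pi / 2:
            return True, theta  # the arm has dropped to horizontal
    return False, theta


for period in (1, 200):
    fell, angle = simulate(period)
    print(f"control period {period} ms: fell={fell}, final angle={angle:.5f} rad")
```

With these assumed parameters, the 1 ms controller brings the arm back to upright, while the 200 ms controller reacts too late and the arm falls. That is why the control task gets a dedicated core that nothing else can delay.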

Kenton: Yeah, I agree with that. And I think you highlighted something about this earlier, which is the idea that individually, none of these tasks are necessarily all that heinously difficult. But being able to do them all on one platform is quite advantageous in terms of having a system that is lower cost, smaller, lower power, and presumably could even be a little bit easier to design. Would you agree with that?

Christian: Yes. That’s the whole idea of this hardware consolidation. In the past, it used to be three different boxes wired up with some cables and some Ethernet switches and whatnot. Now you can bring all sorts of applications together in one tiny low-power box. So there’s no chance to rewire some cables, or to switch off the firewall, for example, just for test purposes, and then forget to switch it back on again. All of this is no longer possible if it’s all together on one platform. And of course, it’s a tremendous saving in hardware cost. It’s just one system, with much easier maintenance and everything together on one platform. So I totally agree this makes sense, and we will see more and more of those applications. It’s not a new technology; there are lots of live installations, even in mission-critical applications, and it has been doing its job for quite a while.

Kenton: Yeah, exactly, exactly. In some senses, I would say just from the raw CPU point of view, this is a noteworthy improvement. But it seems like a lot of the other capabilities that surround the CPU are really what make this platform interesting. One of them you mentioned is that the GPU performance has really tremendously improved. And I believe you can take advantage of that with the OpenVINO platform you just mentioned. Is that right?

Christian: Yeah, absolutely. So this really gives a boost, and this allows you to run AI algorithms at a reasonably good speed. I have to say it always depends on the details and on the complexity of the task, but let’s say an average task can be performed quite well. Of course, the GPU can also be used to drive a display; don’t forget about that. And 4K is fully supported at up to 60 hertz on two channels. So that’s great for an Atom platform. But there is even more advantage in this AI acceleration.

Kenton: Yep, exactly. We’re not talking about gaming PCs. So the GPU is not important from that perspective. It’s great. It’s great for AI.

Christian: It’s good, but it’s not in the gaming class.

Kenton: Well, I don’t know. You can probably at least get Doom running on this.

Christian: Yeah. I’m pretty sure it will run nicely if you find the version which runs on Windows 10.

Kenton: There you go. I’ve seen it run. You wouldn’t believe all the places that I’ve seen people run it. I saw somebody actually put it on a pregnancy tester that had a little LED screen. So people have done all kinds of crazy things there.

Christian: I’m pretty sure it is. But honestly, of course, with newer platforms, not all of the older operating systems are supported anymore. And those operating systems are often no longer supported by the operating system vendors either. So when you step up to the latest and greatest hardware platforms, sometimes you also have to step up your software levels, especially the operating systems. But this is also a security point. It must be done; I think there’s not much of a way around it.

Kenton: Yeah, for sure, for sure. And I want to talk a little bit more, in fact, about that multi-OS approach you just mentioned. Because I think we’re moving forward with more and more designs that have a lot of complex combinations, like the case we’ve been talking about with motion control, some computer vision, some pretty sophisticated communications, firewall capabilities. People will, of course, have all kinds of existing equipment in the field, and they may need to bring their legacy applications over onto this thing. And those legacy systems may be running on a bunch of different OSs. You’ll get this because, like we’ve been talking about, the existing systems may be in three or four or five boxes. And then of course, people might want to use a more modern operating system for all the reasons you’ve mentioned. So how do you actually go about pulling together all these disparate systems on old and different OSs onto a new platform?

Christian: This hypervisor supports almost any operating system. And if you have, let’s say, the pressure, or you have no chance to change to a new operating system, because maybe the source code for your application was lost or whatnot, then it’s still possible to run an older operating system behind a firewall, let’s say. With a hypervisor system, you can run an old operating system and still maintain this firewall, so it’s not directly connected to the outside world.

This firewall, which might be a small-footprint Linux, for example, can of course be kept up to date. So the security part can be installed independently from the operating system itself, because the hypervisor is organized in such a way that it’s not just the CPU cores which are dedicated to the different operating systems; it’s also the resources. The Ethernet controller which connects to the outside world is also just a resource, which will be attached to the security operating system, this firewall Linux. And from there, all other communication to the other operating systems happens by internal virtual Ethernet connectivity, which means none of the other operating systems can talk directly to the outside world. Each and every bit of traffic has to pass through this firewall application.

That’s one way to maintain, let’s say, even older operating systems if you have no way to work around it. Of course, if you can update to the latest version, that’s better: you get good security from the operating system itself and improve on it with this extra firewall. Of course, this is just one typical application. You can combine almost any operating systems together. The only exception I have heard of is that you can’t combine multiple Windows operating systems. But this is based on Microsoft licenses.
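The firewall arrangement described above can be modeled as a small graph check. This is only a sketch under assumptions: the guest names, the `LINKS` table, and the function `guests_bypassing_firewall` are hypothetical and are not part of any hypervisor’s configuration format. It verifies that no guest operating system can reach the outside network without passing through the firewall partition.

```python
from collections import deque

# Hypothetical partition layout: the physical Ethernet controller is assigned
# only to the firewall guest; every other guest has a virtual Ethernet link
# to the firewall and nothing else.
LINKS = {
    "outside_network": {"firewall_linux"},
    "firewall_linux": {"outside_network", "rtos", "legacy_os", "gui_os"},
    "rtos": {"firewall_linux"},
    "legacy_os": {"firewall_linux"},
    "gui_os": {"firewall_linux"},
}


def guests_bypassing_firewall(links, firewall="firewall_linux",
                              outside="outside_network"):
    """Return guests reachable from outside without crossing the firewall."""
    reachable, queue = {outside}, deque([outside])
    while queue:
        node = queue.popleft()
        for neighbor in links.get(node, ()):
            # Skip the firewall node: we only want paths that avoid it.
            if neighbor != firewall and neighbor not in reachable:
                reachable.add(neighbor)
                queue.append(neighbor)
    return sorted(reachable - {outside})


print(guests_bypassing_firewall(LINKS))
```

An empty result means every path to the outside world runs through the firewall. If a guest were accidentally given its own physical network port, it would show up in the returned list.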

Kenton: Yes, sometimes the lawyers and not the technologies are the things that get in the way, right?

Christian: Mm-hmm. Right. In this case it’s not a technical limitation. You’re right.

Kenton: Well, that’s really cool. And I have to make sure I mention that the hypervisor capability you’re talking about is part of what Congatec offers, right?

Christian: Actually, this hypervisor is created and maintained by a company called Real Time Systems. It’s an independent company, but it’s owned by Congatec. So it’s not just running on Congatec hardware; it runs on any x86 hardware. But of course, as we do quite intense tests and the engineers work quite closely together, especially in the testing phase of new products, we always ensure that the combination of Congatec hardware and the real-time hypervisor runs perfectly.

Kenton: Perfect. That makes sense. So just to kind of recap what I’ve heard so far: when we’re talking about the Elkhart Lake platform, that’s the new Atom x6000E family, as well as some Celeron and other brand names that go along with that. Some of the things that make this pretty interesting and different from what’s been around before include up to four cores, with a pretty significant improvement in performance over previous generations. You’ve got, of course, the GPU, which is, I think, quite a dramatic improvement and useful not only for creating visuals, but also for doing things like executing AI. You’ve got some improved I/O that’s going to be really useful for IoT use cases, like PCIe, the faster flash, the ECC memory. And I think all these things are pretty amazing.

I have to say one of the things that is most surprising to me is this new thing called the Intel Programmable Services Engine, and I’d like you to explain a little bit about what that is. It’s particularly unusual and surprising to me in that it’s built around a dedicated ARM microcontroller, which is quite a new feature compared to what we’ve seen before. So what is this Programmable Services Engine about?

Christian: I’m not the engineer behind this. But my understanding is that the PSE is, let’s say, a small ARM controller which is already integrated in the CPU, and which can give a helping hand with some, let’s say, parallel tasks. This is something that, let’s say, Congatec started to do about 15 years ago. Each and every module and board is equipped with a little microcontroller. In fact, it’s a small ARM core in the meantime, which helps to do some embedded tasks like system monitoring. So this is completely independent from the CPU. It helps to boot up, as it does the power sequencing. It generates, for example, the I2C bus, which is not a standard feature of the CPU. And this does not take compute performance from the CPU.

All of this, the watchdog timer, for example, is completely independent from the CPU. All of this is integrated in this little ARM controller. And my understanding is that you can do the same or similar features, maybe even more, on this integrated little controller, the PSE. Right now, for us, it’s important that we have the same features and implementations throughout all of our platforms. So right now, we still use our own ARM implementation of this, which is successful and well known, as mentioned, for over 15 years. But of course, if there are more and more features like this in upcoming new platforms from Intel, sure, we’ll think about how to bring this to the advantage of the whole system. Right now we are even looking at using it to improve our out-of-band management, which means, let’s say, improved remote control: even when the CPU is not completely up, you have a certain access to the system for manageability. But right now this is a forward-looking feature, not yet completely utilized by platforms, but I’m looking forward to getting more of this in the future.
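To make the watchdog idea concrete, here is a toy model of how such a timer behaves. It is a sketch only: the `Watchdog` class, its method names, and the 500 ms timeout are hypothetical and do not represent the Intel PSE or Congatec board controller interfaces. The key point is that the check runs independently of the software being supervised.

```python
class Watchdog:
    """Toy model of a hardware watchdog timer (hypothetical, for illustration)."""

    def __init__(self, timeout_ms):
        self.timeout_ms = timeout_ms
        self.last_kick_ms = 0

    def kick(self, now_ms):
        """Called by healthy application software to restart the countdown."""
        self.last_kick_ms = now_ms

    def expired(self, now_ms):
        """Checked by the independent controller; True means 'reset the system'."""
        return now_ms - self.last_kick_ms > self.timeout_ms


wd = Watchdog(timeout_ms=500)
events = []
for now_ms in range(0, 2001, 100):
    if now_ms <= 1000:
        wd.kick(now_ms)          # application is healthy for the first second
    if wd.expired(now_ms):       # afterwards it hangs and stops kicking
        events.append(now_ms)
        break
print(events)  # time at which the watchdog would trigger a reset
```

In this run the application stops responding after one second, and the watchdog fires once more than 500 ms have passed without a kick. Because the timer lives on a separate controller, it still works when the main CPU is hung.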

Kenton: It seems like in a lot of ways, this is providing to your point what would have required previously a companion chip to do the low-level management of the board. And so for example, one of the things you mentioned was the out of band management, which I think is a pretty neat capability here, which allows you to remotely monitor the status and fix things should something go wrong on your system.

Christian: Exactly. And it’s another clear sign. It is a typical embedded feature to have a little embedded controller here. And that’s another clear sign of the embedded thinking behind this new platform.

Kenton: Yeah, absolutely. Absolutely. I want to go back and talk a little bit more about some of these real-time topics. We’ve talked a bit already about the significance of having all of these real-time capabilities on board, for example, having different cores doing things in parallel in real-time, and a little companion ARM engine doing other things in real-time. But I think just as important, and we’ve touched on this just a little bit, are the off-system real-time capabilities. In other words, you’ve got the real-time control, but then you’ve also got the real-time communications elements of it. And I wonder if you could say a little bit more about what your customers are asking for there and what you’re providing. What do you see as being new in terms of having that time coordinated computing, being able to do things in a distributed fashion across a factory, or whatever the context may be?

Christian: Yeah, the big advantage of TSN, Time-Sensitive Networking, is that you can utilize your existing, let’s say, Ethernet infrastructure, or you can at least use the cables, though you have to upgrade some switches and whatnot. Let’s say in a nutshell, you have a standard Ethernet cable, and you reserve part of the bandwidth of this cable for real-time traffic. So we’ve done a demo at trade shows where we, let’s say, had a traffic generator which can really overload the cable. And in parallel we reserved about 20% of the bandwidth. Let’s say it’s 800 kilobits here just for normal traffic, let’s say streaming videos and whatever you can do on top of that. And then there’s the real-time control, to communicate with the other robots, let’s say from robot to robot, where things must be in real time.

There was a reserved channel bandwidth of about 200K. And no matter how much streaming traffic we put onto it, there was no really recognizable jitter or delay; it just went smoothly through, while the other channel was completely overloaded, and the video was no longer running because the channel was so full. So this means you can use the existing infrastructure and bring it to a new level, sharing the existing bandwidth between normal and real-time traffic. And I believe that’s a big advantage over, let’s say, a lot of the different existing fieldbus standards, which are all geared towards real-time communication but all need their own infrastructure. Here the big advantage of TSN is the existing infrastructure. And we see a big demand, since each and every, let’s say, automation company is working on or already has implementations for TSN.

So it’s still a standard where some details are in definition; it’s not yet completely done. But most of it is done and implemented and can be used nowadays. So the Ethernet controller itself is one part. But of course, you need the rest of the infrastructure, like switches, which also need to be TSN capable. And to be honest, the complicated part of the whole TSN is in fact the configuration, which must happen on site, depending on your individual infrastructure. Other than that, the use of TSN is quite simple. So the demo we set up for the trade show was not too complicated.
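The bandwidth reservation Christian describes can be sketched as a repeating time schedule, in the spirit of a TSN time-aware shaper. This is a simplified illustration under assumptions: the 1 Gbit/s link speed, the 1 ms cycle, and the names `GateWindow` and `bandwidth_shares` are hypothetical, and a real schedule also needs synchronized clocks and guard bands, which are ignored here.

```python
from dataclasses import dataclass


@dataclass
class GateWindow:
    """One slot in a repeating transmission cycle (hypothetical model)."""
    traffic_class: str
    duration_us: int


def bandwidth_shares(link_mbps, windows):
    """Return the bandwidth each traffic class is guaranteed, in Mbit/s."""
    cycle_us = sum(w.duration_us for w in windows)
    shares = {}
    for w in windows:
        share = link_mbps * w.duration_us / cycle_us
        shares[w.traffic_class] = shares.get(w.traffic_class, 0.0) + share
    return shares


# Assumed example: a 1 ms cycle where 20% of the time is reserved
# exclusively for real-time control traffic.
schedule = [
    GateWindow("real_time", 200),
    GateWindow("best_effort", 800),
]
shares = bandwidth_shares(1000, schedule)
print(shares)
```

Because the real-time window is reserved in time, flooding the best-effort window with video traffic cannot delay the control frames. That matches the demo result, where the overloaded video stream stalled while the control channel showed no noticeable jitter.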

Kenton: Very cool. Very cool. I mean, this all sounds great. But I want to ask about how developers and engineers can get started most quickly and effectively in taking advantage of all these new capabilities. What would you recommend?

Christian: If you want to play or start working with Elkhart Lake, the new Atom x6000E series, of course the easiest way is to do it on a single-board computer. We have implemented this on a quite tiny Pico-ITX board, which is just 72 by 100 millimeters, with two Ethernet ports on it. It’s just plug and play; you can start immediately. Of course, we also offer this in other form factors, depending on the customer’s, let’s say, experience or design history. So we have it on COM Express: the very tiny COM Express Mini, and also COM Express Compact. So two flavors with, let’s say, more or fewer I/Os and more or less real estate for the module. But we also maintain the Qseven standard; it’s available on Qseven and on the SMARC module standard. So that’s five different implementations of the same platform; I think there’s one for each flavor. It’s a really wide selection.

So I believe the easiest would be starting with the single-board computer, the Pico-ITX, or if you want to start with one of the module form factors, depending on your I/O needs, one or the other might be best for you. We offer complete carrier boards, reference carrier boards or evaluation carrier boards, where the customer can start testing immediately. And as an extra goodie on top, for all these carrier boards, we also provide the schematics to the customers. Based on those schematics, a customer can start their own carrier board design very quickly and easily, using this as a blueprint, adding whatever they like or need and removing anything that is not required for their application. And that’s a quick start into a whole development.

Kenton: And that’s great. So I think this flexible approach would make the boards and modules we’re talking about flexible enough to be suitable to just about any kind of application. But I’m wondering if there are any particular areas that you think would be well suited to these solutions? So I’m thinking, for example, we’ve talked about some like industrial control, some visual applications like digital signage. So what are some of the target applications where you think these solutions would be particularly well suited?

Christian: Of course, there are a lot of use cases in the medical environment, but also in some areas you wouldn’t think about, like the gaming industry or even the audio industry. Now, we’re doing a podcast here. Audio recording and audio processing are quite challenging for real-time. So we have, in fact, customers who provide professional audio equipment based on computer modules, and of course they take advantage of these real-time capabilities. So overall, you see these things all over the place. When it comes to, let’s say, graphics output, something like digital signage in trains, in airports, whatnot, the graphics capabilities and the high resolution of this Atom are quite helpful. Also, the low power consumption is always helpful in each and every environment. You name it; the customers usually surprise us.

So the beauty of the computer module is that everything compute-oriented is there, and the customization to the individual applications or business segments happens on the carrier board. And this is absolutely flexible. So it’s something like a customized solution out of the box, or something in between: one part is out of the box, the other part is customized. But compared with doing everything from scratch, it’s much, much easier to utilize one of the existing computer modules, or even a single-board computer, which is even easier because you don’t have to do a carrier board.

Kenton: Got it. That totally makes sense. So I want to make sure I touch on one other thing, which is that we’ve talked so far about the new Atom processors, but Intel has also announced its latest and greatest in the Core series, the 11th Gen Core processors, also known as Tiger Lake. So can you tell me, just in brief, what is new with Tiger Lake?

Christian: Yeah. Congatec is a very industrial-oriented company, so for us the biggest thing is the industrial use case. It’s the first time that we have Intel Core processors which are fully specified for minus 40 up to plus 85 degrees centigrade. So this is the wide range, extended, industrial, whatever you call it, temperature range. And of course, this comes on top of all of the architectural and performance improvements, but for us it is the most important point.

Kenton: Yes, absolutely. All the performance features in the world are not so useful if the part can’t take the heat.

Christian: Right, right. Of course, it’s a very good power envelope. And we’re absolutely surprised about the performance. This is a single chip system on chip. And it does provide, you really see, at least from the first feeling here, it does provide the performance of the two-chip high power versions from the past year. So especially the graphics went up quite a lot, which is, as we mentioned before for Elkhart Lake as well, a very big step towards enabling more power-hungry AI applications on this platform.

Kenton: Yeah, exactly. And so I’ve been seeing, from the consumer side of things, reviews of the Tiger Lake, 11th Gen Core family, and people have been very, very impressed with the performance they’re getting out of the graphics engine, really citing it as being just a huge leap in performance from the previous integrated graphics solutions. And so, I would think from an embedded/IoT perspective, of course, there are going to be applications like a video wall or something that this could be interesting for. But also, like you said, from the perspective of using these capabilities for AI with the OpenVINO platform, it’s a big, big boost in performance. So like you were saying with Elkhart Lake, where you can do modestly challenging things, I would imagine with Tiger Lake, with the latest Core, you could do really quite a lot of AI processing on that platform.

Christian: And of course, the I/O performance: it’s the first time we have PCI Express Gen 4. So that’s another big step, another doubling of performance compared to Gen 3, which already offers great performance. And we get USB4 with Thunderbolt; that’s also a tremendous step in performance. And let’s say I’m really proud, being the chairman of the technical committee for COM-HPC, that Tiger Lake is the first COM-HPC board we announced. Of course, it’s also a big step to have it on COM Express, to maintain what’s there; COM Express is the most successful module form factor ever. But it’s the first COM-HPC, which starts on top of COM Express. For example, on COM-HPC we have support for USB4. On COM Express, no USB4 support is possible; the pins are simply not defined, and there’s not enough space to get it out.
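The doubling from Gen 3 to Gen 4 can be checked with a quick calculation. This sketch uses the published PCI Express per-lane transfer rates (8 GT/s for Gen 3, 16 GT/s for Gen 4) and the 128b/130b line encoding both generations share; these figures come from the PCIe specifications rather than from the conversation, and the function name `lane_throughput_gbytes` is just an illustrative choice. Protocol overhead above the line encoding is ignored.

```python
def lane_throughput_gbytes(transfer_rate_gt, payload_bits=128, encoded_bits=130):
    """Approximate usable throughput of one PCIe lane in GB/s, per direction.

    Accounts only for the 128b/130b line encoding, not packet overhead.
    """
    return transfer_rate_gt * payload_bits / encoded_bits / 8


gen3 = lane_throughput_gbytes(8.0)    # PCIe Gen 3: 8 GT/s per lane
gen4 = lane_throughput_gbytes(16.0)   # PCIe Gen 4: 16 GT/s per lane

print(f"Gen 3 x1: {gen3:.3f} GB/s, x4: {4 * gen3:.2f} GB/s")
print(f"Gen 4 x1: {gen4:.3f} GB/s, x4: {4 * gen4:.2f} GB/s")
print(f"Gen 4 / Gen 3 ratio: {gen4 / gen3:.1f}")
```

Each Gen 4 lane carries roughly 2 GB/s per direction against roughly 1 GB/s for Gen 3, so the same number of lanes moves twice the data.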

Kenton: Yeah. And so, to kind of wrap this all together, it feels to me like this is an interesting juncture. I think you and I both have been in this industry long enough that we’ve seen periods where every announcement of a processor is presented as something really revolutionary, with pretty dramatic performance improvements and so forth from generation to generation. In the last couple of years, it has seemed to me like it’s been more evolutionary, with a little less excitement generated around some of the newer processor releases. But here there are some features that individually are quite remarkable, like some of the I/O we’ve been talking about, some of the graphics capabilities, and this programmable services engine. And it feels to me like in a lot of ways these two platforms, Elkhart Lake and Tiger Lake, are both culminations, bringing together a lot of different important technologies that individually may or may not be that exciting, but together really feel like they add up to a pretty dramatic set of improvements. Would you agree with that?

Christian: Absolutely. So these are major steps, on the low-power side with the new Atom, and at the, let’s say, higher power envelope and higher performance level with 11th Gen, which is industrial-grade for the first time. And having all of these on modules, you can upgrade and bring this to the applications very fast. And this is the whole idea behind Congatec: to bring this technology to the customers in a very simple and easy way. Because the most important thing nowadays, I believe, is time to market. So the faster and better we can support a customer, the faster their application will be on the market, and the more successful it will be for the customer and for Congatec.

Kenton: Absolutely. All right. Well, before we run out of time, I should give you an opportunity if there’s anything I haven’t been clever enough to ask you. Is there anything that you’d like to add?

Christian: Actually, we talked quite a lot. I think we went through almost everything I had on top of my mind. So I think with these two launches, we have a complete refresh of the whole platform range. And I’m pretty sure each and every customer will find advantages in stepping up to this new technology and these performance levels.

Kenton: Yeah, absolutely. I totally agree with that. Like I said, I think just across the board, there’s so many significant new capabilities and features here that there’s going to be something for everyone to really benefit from. So that just leaves me to thank you, Christian, for joining me today on the podcast. Really appreciate your time and perspectives.

Christian: Thank you, Kenton. It was my pleasure.

Kenton: And thanks to our listeners for joining us.

This has been the IoT Chat podcast. If you enjoyed listening, please support us by subscribing and rating us on your favorite podcast app.

We’ll be back next time with more ideas from industry leaders at the forefront of IoT design.